Hi all. My laptop has two NVMe drives: a 2 TB one with Linux on ZFS and a 1 TB one with FreeBSD. I also have an external NVMe drive in an enclosure, which I've formatted with ZFS to use as a backup.

This is my situation:

marco@gentsar ~ $ zpool list -v
NAME                                               SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG    CAP  DEDUP  HEALTH  ALTROOT
backup                                             928G   302G   626G        -         -    0%    32%  1.00x  ONLINE  -
  sda2                                             931G   302G   626G        -         -    0%  32.5%      -  ONLINE
lpool                                             1.80T   292G  1.51T        -         -    0%    15%  1.00x  ONLINE  -
  nvme-MSI_M480_PRO_2TB_511240829130000340-part3  1.80T   292G  1.51T        -         -    0%  15.9%      -  ONLINE

marco@gentsar ~ $ zfs list
NAME                            USED  AVAIL  REFER  MOUNTPOINT
backup                          302G   597G   384K  /backup
backup/bsd                     9.62G   597G   384K  none
backup/bsd/freebsd-home         384K   597G   384K  none
backup/bsd/freebsd-home-marco   503M   597G   503M  none
backup/bsd/freebsd-root        9.13G   597G  9.13G  none
backup/condivise                180G   597G   180G  /backup/condivise
backup/linux                    113G   597G   384K  none
backup/linux/arch-home         23.9G   597G  23.9G  none
backup/linux/arch-root         21.0G   597G  21.0G  none
backup/linux/chimera-home      1.67G   597G  1.67G  none
backup/linux/chimera-root      13.7G   597G  13.7G  none
backup/linux/gentoo-root       31.6G   597G  31.6G  none
backup/linux/void-home         9.43G   597G  9.43G  none
backup/linux/void-root         11.4G   597G  11.4G  none
lpool                           293G  1.46T    96K  none
lpool/condivise                 178G  1.46T   178G  legacy
lpool/home                     46.2G  1.46T    96K  none
lpool/home/arch                22.7G  1.46T  19.9G  legacy
lpool/home/chimera             2.23G  1.46T  1.23G  legacy
lpool/home/fedora                96K  1.46T    96K  none
lpool/home/gentoo              11.9G  1.46T  10.3G  legacy
lpool/home/void                9.39G  1.46T  5.37G  legacy
lpool/root                     68.7G  1.46T    96K  none
lpool/root/arch                20.4G  1.46T  16.6G  /
lpool/root/chimera             15.2G  1.46T  9.61G  /
lpool/root/gentoo              24.5G  1.46T  20.6G  /
lpool/root/void                8.54G  1.46T  7.98G  /

For snapshots I'm using zrepl. Following its documentation, I created this config:

marco@gentsar ~ $ cat /etc/zrepl/zrepl.yml
# This config serves as an example for a local zrepl installation that
# backups the entire zpool `system` to `backuppool/zrepl/sink`
#
# The requirements covered by this setup are described in the zrepl documentation's
# quick start section which inlines this example.
#
# CUSTOMIZATIONS YOU WILL LIKELY WANT TO APPLY:
# - adjust the name of the production pool `system` in the `filesystems` filter of jobs `snapjob` and `push_to_drive`
# - adjust the name of the backup pool `backuppool` in the `backuppool_sink` job
# - adjust the occurrences of `myhostname` to the name of the system you are backing up (cannot be easily changed once you start replicating)
# - make sure the `zrepl_` prefix is not being used by any other zfs tools you might have installed (it likely isn't)
jobs:
  # this job takes care of snapshot creation + pruning
  - name: snapjob
    type: snap
    filesystems: {
        "lpool/root/gentoo": true,
        "lpool/home/gentoo": true,
    }
    # create snapshots with prefix `zrepl_` every 15 minutes
    snapshotting:
      type: periodic
      interval: 15m
      prefix: zrepl_
    pruning:
      keep:
        # fade-out scheme for snapshots starting with `zrepl_`
        # - keep all created in the last hour
        # - then destroy snapshots such that we keep 24 each 1 hour apart
        # - then destroy snapshots such that we keep 14 each 1 day apart
        # - then destroy all older snapshots
        - type: grid
          grid: 1x1h(keep=all) | 24x1h | 14x1d
          regex: "^zrepl_.*"
        # keep all snapshots that don't have the `zrepl_` prefix
        - type: regex
          negate: true
          regex: "^zrepl_.*"

  # This job pushes to the local sink defined in job `backuppool_sink`.
  # We trigger replication manually from the command line / udev rules using
  # `zrepl signal wakeup push_to_drive`
  - type: push
    name: push_to_drive
    connect:
      type: local
      listener_name: backup
      client_identity: gentsar
    filesystems: {
        "lpool/root/gentoo": true,
        "lpool/home/gentoo": true,
    }
    send:
      encrypted: false
    replication:
      protection:
        initial: guarantee_resumability
        # Downgrade protection to guarantee_incremental which uses zfs bookmarks instead of zfs holds.
        # Thus, when we yank out the backup drive during replication
        # - we might not be able to resume the interrupted replication step because the partially received `to` snapshot of a `from`->`to` step may be pruned any time
        # - but in exchange we get back the disk space allocated by `to` when we prune it
        # - and because we still have the bookmarks created by `guarantee_incremental`, we can still do incremental replication of `from`->`to2` in the future
        incremental: guarantee_incremental
    snapshotting:
      type: manual
    pruning:
      # no-op prune rule on sender (keep all snapshots), job `snapshot` takes care of this
      keep_sender:
        - type: regex
          regex: ".*"
      # retain
      keep_receiver:
        # longer retention on the backup drive, we have more space there
        - type: grid
          grid: 1x1h(keep=all) | 24x1h | 360x1d
          regex: "^zrepl_.*"
        # retain all non-zrepl snapshots on the backup drive
        - type: regex
          negate: true
          regex: "^zrepl_.*"

  # This job receives from job `push_to_drive` into `backuppool/zrepl/sink/myhostname`
  - type: sink
    name: backup
    root_fs: "backup/linux"
    serve:
      type: local
      listener_name: backup

This config creates snapshots correctly, but I don't understand when the snapshots are moved to the backup pool.

Does anyone use it?

I use zrepl for backups, including to a removable drive. Snapshots are never ‘moved’, they are copied.

The configuration you have posted requires a manual trigger to cause the push_to_drive job to run. To do that you’d run zrepl status, scroll down to the push_to_drive job, and press S to trigger the job.
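The same trigger is also available from the command line, which is what the comment in the sample config refers to. A minimal sketch, assuming the zrepl daemon is running and the job is named `push_to_drive` as in the posted config; the wrapper name `wakeup_job` is only illustrative:

```shell
#!/bin/sh
# wakeup_job is a hypothetical helper around `zrepl signal wakeup JOB`,
# the command named in the sample config's comments.
wakeup_job() {
    job="${1:-push_to_drive}"
    # Usually needs root, because it talks to the daemon's control socket.
    zrepl signal wakeup "$job"
}
```

Calling `wakeup_job` with no argument wakes `push_to_drive`; progress can then be watched in `zrepl status` as before.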

Thanks so much, I'll try tomorrow. But what if I want to automate it? Can I do that?

Certainly, you can use any tool you like (cron, anacron, systemd timers, etc.) to run zrepl signal to trigger the job. I do this on laptops, triggering the job after the laptop comes out of suspend mode.
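For the cron variant, something like the following could work. It is only a sketch: the function name, the script path in the comment, and the hourly schedule are assumptions, while the pool name `backup` and the job name `push_to_drive` come from the config earlier in the thread. It wakes the job only when the backup pool is actually imported, so nothing happens while the external drive is unplugged.

```shell
#!/bin/sh
# Hypothetical cron wrapper: signal the push job only if the backup pool
# is currently imported.
backup_if_present() {
    pool="${1:-backup}"
    job="${2:-push_to_drive}"
    # `zpool list` exits non-zero when the named pool is not imported.
    if zpool list -H -o name "$pool" >/dev/null 2>&1; then
        zrepl signal wakeup "$job"
    else
        echo "pool $pool not imported, skipping $job" >&2
        return 1
    fi
}

# Example root crontab entry, assuming this file is saved as
# /usr/local/bin/zrepl-push.sh and ends with a call to backup_if_present:
#   0 * * * * /usr/local/bin/zrepl-push.sh
```

A systemd timer or a resume hook would call the same function; only the scheduling part changes.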

I'm very stupid. I launched zrepl status and triggered push_to_drive, but I got this error:

═╗┌──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

║jobs ║│Job: push_to_drive │

║├──backup ║│Type: push │

║├──push_to_drive ║│ │

║└──snapjob ║│Replication: │

║ ║│ Attempt #1 │

║ ║│ Status: filesystem-error │

║ ║│ Last Run: 2025-01-25 10:40:24 +0100 CET (lasted 0s) │

║ ║│ Problem: one or more of the filesystems encountered errors │

║ ║│ Progress: [>--------------------------------------------------] 9.5 MiB / 46.3 GiB @ 0 B/s │

║ ║│ lpool/home/gentoo STEP-ERROR (step 1/1, 5.5 MiB/13.6 GiB) server error: cannot create placeholder filesystem backup/linux-root/gentsar/lpool: placeholder filesystem encryption handling │

║ ║│ lpool/root/gentoo STEP-ERROR (step 1/1, 4.0 MiB/32.6 GiB) server error: cannot create placeholder filesystem backup/linux-root/gentsar/lpool: placeholder filesystem encryption handling │

║ ║│ │

║ ║│Pruning Sender: │

║ ║│ Status: ExecErr │

║ ║│ Progress: [>] 0/0 snapshots │

║ ║│ lpool/home/gentoo ERROR: replication cursor bookmark does not exist (one successful replication is required before pruning works) │

║ ║│ │

║ ║│ lpool/root/gentoo ERROR: replication cursor bookmark does not exist (one successful replication is required before pruning works) │

║ ║│ │

║ ║│ │

║ ║│Pruning Receiver: │

║ ║│ Status: Done │

║ ║│ nothing to do │

║ ║│ │

║ ║│Snapshotting: │

║ ║│ Type: manual │

║ ║│ <no details available> │

║ ║│

In /var/log/zrepl.log I read this:

2025-01-25T10:51:55+01:00 [ERRO][push_to_drive][pruning][jHmm$EuX7$EuX7.LHnX.wIhD.ABEk]: : plan error, skipping filesystem prune_side="sender" err="replication cursor bookmark does not exist (one successful replication is required before pruning works)" fs="lpool/root/gentoo" orig_err_type="*errors.fundamental"

Maybe the problem is that my datasets aren't encrypted, but I don't understand how to use send without encryption.
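Whether a dataset is encrypted can be read from its `encryption` property, which is what decides the value of `send: encrypted:` in the push job. A rough sketch, with a made-up helper name; it prints `false` for an unencrypted dataset (the only value that can work there, judging by zrepl's "encrypted send mandated by policy" error) and `true` otherwise as the usual choice:

```shell
#!/bin/sh
# Hypothetical helper: suggest a value for `send: encrypted:` for a dataset.
# `zfs get -H -o value encryption DATASET` prints "off" for unencrypted
# datasets and the cipher suite (for example aes-256-gcm) otherwise.
send_encrypted_value() {
    value="$(zfs get -H -o value encryption "$1")" || return 2
    if [ "$value" = "off" ]; then
        echo false
    else
        echo true
    fi
}
```

For example, `send_encrypted_value lpool/root/gentoo` would be run once per dataset listed in the `filesystems` filter.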

It would probably be best to ask these questions in the zrepl repository or its Matrix channel.

This is my zrepl config that pushes encrypted backups to an external USB drive:

[root@endeavour ~]# cat /etc/zrepl/zrepl.yml
# This config serves as an example for a local zrepl installation that
# backups the entire zpool `system` to `backuppool/zrepl/sink`
#
# The requirements covered by this setup are described in the zrepl documentation's
# quick start section which inlines this example.
#
# CUSTOMIZATIONS YOU WILL LIKELY WANT TO APPLY:
# - adjust the name of the production pool `system` in the `filesystems` filter of jobs `snapjob` and `push_to_drive`
# - adjust the name of the backup pool `backuppool` in the `backuppool_sink` job
# - adjust the occurrences of `myhostname` to the name of the system you are backing up (cannot be easily changed once you start replicating)
# - make sure the `zrepl_` prefix is not being used by any other zfs tools you might have installed (it likely isn't)
global:
  logging:
    - type: "stdout"
      level: "warn"
      format: "human"
    - type: "syslog"
      level: "warn"
      format: "human"

jobs:
  # this job takes care of snapshot creation + pruning
  - name: snapjob
    type: snap
    filesystems: {
        "rust1<": true,
    }
    # create snapshots with prefix `zrepl_` every 15 minutes
    snapshotting:
      type: periodic
      interval: 15m
      prefix: zrepl_
    pruning:
      keep:
        # fade-out scheme for snapshots starting with `zrepl_`
        # - keep all created in the last hour
        # - then destroy snapshots such that we keep 24 each 1 hour apart
        # - then destroy snapshots such that we keep 14 each 1 day apart
        # - then destroy all older snapshots
        - type: grid
          grid: 1x1h(keep=all) | 24x1h | 14x1d
          regex: "^zrepl_.*"
        # keep all snapshots that don't have the `zrepl_` prefix
        - type: regex
          negate: true
          regex: "^zrepl_.*"

  # This job pushes to the local sink defined in job `backuppool_sink`.
  # We trigger replication manually from the command line / udev rules using
  # `zrepl signal wakeup push_to_drive`
  - type: push
    name: push_to_drive
    connect:
      type: local
      listener_name: backuppool_sink
      client_identity: endeavour
    filesystems: {
        "rust1/crypt<": true
    }
    send:
      encrypted: true
    replication:
      protection:
        initial: guarantee_resumability
        # Downgrade protection to guarantee_incremental which uses zfs bookmarks instead of zfs holds.
        # Thus, when we yank out the backup drive during replication
        # - we might not be able to resume the interrupted replication step because the partially received `to` snapshot of a `from`->`to` step may be pruned any time
        # - but in exchange we get back the disk space allocated by `to` when we prune it
        # - and because we still have the bookmarks created by `guarantee_incremental`, we can still do incremental replication of `from`->`to2` in the future
        incremental: guarantee_incremental
    snapshotting:
      type: manual
    pruning:
      # no-op prune rule on sender (keep all snapshots), job `snapshot` takes care of this
      keep_sender:
        - type: regex
          regex: ".*"
      # retain
      keep_receiver:
        # longer retention on the backup drive, we have more space there
        - type: grid
          grid: 1x1h(keep=all) | 24x1h | 360x1d
          regex: "^zrepl_.*"
        # retain all non-zrepl snapshots on the backup drive
        - type: regex
          negate: true
          regex: "^zrepl_.*"

  # This job receives from job `push_to_drive` into `backuppool/zrepl/sink/myhostname`
  - type: sink
    name: backuppool_sink
    root_fs: "zfsbackup/zrepl"
    serve:
      type: local
      listener_name: backuppool_sink

- Just enable the zrepl service.
- Sometimes I check zrepl status and force-start the backup job with Shift+S if the job has errored.
- You may want to adjust how often snapshots are taken (I've never needed them and am going to start taking them every 4 hours).
- If you run LXD you may want to exclude some of those datasets (I just noticed they've screwed up my backups for a second time).

Following the “ABC of backups” - I also use Vorta Backup to send backups to Borg Backup on remote storage.

OK, I'm trying it. This is my zrepl.yml:

marco@tsaroo ~ $ cat /etc/zrepl/zrepl.yml
# This config serves as an example for a local zrepl installation that
# backups the entire zpool `system` to `backuppool/zrepl/sink`
#
# The requirements covered by this setup are described in the zrepl documentation's
# quick start section which inlines this example.
#
# CUSTOMIZATIONS YOU WILL LIKELY WANT TO APPLY:
# - adjust the name of the production pool `system` in the `filesystems` filter of jobs `snapjob` and `push_to_drive`
# - adjust the name of the backup pool `backuppool` in the `backuppool_sink` job
# - adjust the occurrences of `myhostname` to the name of the system you are backing up (cannot be easily changed once you start replicating)
# - make sure the `zrepl_` prefix is not being used by any other zfs tools you might have installed (it likely isn't)
global:
  logging:
    - type: "stdout"
      level: "warn"
      format: "human"
    - type: "syslog"
      level: "warn"
      format: "human"

jobs:
  # this job takes care of snapshot creation + pruning
  - name: snapjob
    type: snap
    filesystems: {
        "lpool/root/gentoo-kde-systemd": true,
        "lpool/home/gentoo-kde-systemd": true,
    }
    # create snapshots with prefix `zrepl_` every 15 minutes
    snapshotting:
      type: periodic
      interval: 15m
      prefix: zrepl_
    pruning:
      keep:
        # fade-out scheme for snapshots starting with `zrepl_`
        # - keep all created in the last hour
        # - then destroy snapshots such that we keep 24 each 1 hour apart
        # - then destroy snapshots such that we keep 14 each 1 day apart
        # - then destroy all older snapshots
        - type: grid
          grid: 1x1h(keep=all) | 24x1h | 14x1d
          regex: "^zrepl_.*"
        # keep all snapshots that don't have the `zrepl_` prefix
        - type: regex
          negate: true
          regex: "^zrepl_.*"

  # This job pushes to the local sink defined in job `backuppool_sink`.
  # We trigger replication manually from the command line / udev rules using
  # `zrepl signal wakeup push_to_drive`
  - type: push
    name: push_to_drive
    connect:
      type: local
      listener_name: backuppool_sink
      client_identity: tsaroo
    filesystems: {
        "lpool/root/gentoo-kde-systemd": true
        #"lpool/home/gentoo-kde-systemd": true
    }
    send:
      encrypted: true
    replication:
      protection:
        initial: guarantee_resumability
        # Downgrade protection to guarantee_incremental which uses zfs bookmarks instead of zfs holds.
        # Thus, when we yank out the backup drive during replication
        # - we might not be able to resume the interrupted replication step because the partially received `to` snapshot of a `from`->`to` step may be pruned any time
        # - but in exchange we get back the disk space allocated by `to` when we prune it
        # - and because we still have the bookmarks created by `guarantee_incremental`, we can still do incremental replication of `from`->`to2` in the future
        incremental: guarantee_incremental
    snapshotting:
      type: manual
    pruning:
      # no-op prune rule on sender (keep all snapshots), job `snapshot` takes care of this
      keep_sender:
        - type: regex
          regex: ".*"
      # retain
      keep_receiver:
        # longer retention on the backup drive, we have more space there
        - type: grid
          grid: 1x1h(keep=all) | 24x1h | 360x1d
          regex: "^zrepl_.*"
        # retain all non-zrepl snapshots on the backup drive
        - type: regex
          negate: true
          regex: "^zrepl_.*"

  # This job receives from job `push_to_drive` into `backuppool/zrepl/sink/myhostname`
  - type: sink
    name: backup
    root_fs: "zroot/linux/tsaroo"
    serve:
      type: local
      listener_name: backuppool_sink

When I launch zrepl status I get this:

╔═════════════════════════════════════════════╗┌───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

║jobs ║│Job: push_to_drive │

║├──backup ║│Type: push │

║├──push_to_drive ║│ │

║└──snapjob ║│Replication: │

║ ║│ Attempt #1 │

║ ║│ Status: filesystem-error │

║ ║│ Last Run: 2025-03-15 10:58:05 +0100 CET (lasted 0s) │

║ ║│ Problem: one or more of the filesystems encountered errors │

║ ║│ Progress: [==================================================>] 0 B / 0 B @ 0 B/s │

║ ║│ lpool/root/gentoo-kde-systemd PLANNING-ERROR (step 0/0, 0 B/0 B) validate send arguments: encrypted send mandated by policy, but filesystem "lpool/root/gentoo-kde-systemd" is not encrypted │

║ ║│ │

║ ║│Pruning Sender: │

║ ║│ Status: ExecErr │

║ ║│ Progress: [>] 0/0 snapshots │

║ ║│ lpool/root/gentoo-kde-systemd ERROR: replication cursor bookmark does not exist (one successful replication is required before pruning works) │

║ ║│ │

║ ║│ │

║ ║│Pruning Receiver: │

║ ║│ Status: Done │

║ ║│ nothing to do │

║ ║│ │

║ ║│Snapshotting: │

║ ║│ Type: manual │

║ ║│ <no details available> │

║ ║│

I don't understand what I'm doing wrong. I'm trying to use my second NVMe drive, the one with FreeBSD, as the backup disk.

If I comment out the encrypted line I get this:

╔═════════════════════════════════════════════╗┌───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

║jobs ║│Job: push_to_drive │

║├──backup ║│Type: push │

║├──push_to_drive ║│ │

║└──snapjob ║│Replication: │

║ ║│ Attempt #1 │

║ ║│ Status: filesystem-error │

║ ║│ Last Run: 2025-03-15 11:01:03 +0100 CET (lasted 0s) │

║ ║│ Problem: one or more of the filesystems encountered errors │

║ ║│ Progress: [>--------------------------------------------------] 7.0 MiB / 44.4 GiB @ 0 B/s │

║ ║│ lpool/root/gentoo-kde-systemd STEP-ERROR (step 1/1, 7.0 MiB/44.4 GiB) server error: cannot create placeholder filesystem zroot/linux/tsaroo/tsaroo/lpool: placeholder filesystem encryption handling is unspecified in receiver config│

║ ║│ │

║ ║│Pruning Sender: │

║ ║│ Status: ExecErr │

║ ║│ Progress: [>] 0/0 snapshots │

║ ║│ lpool/root/gentoo-kde-systemd ERROR: replication cursor bookmark does not exist (one successful replication is required before pruning works) │

║ ║│ │

║ ║│ │

║ ║│Pruning Receiver: │

║ ║│ Status: Done │

║ ║│ nothing to do │

║ ║│ │

║ ║│Snapshotting: │

║ ║│ Type: manual │

║ ║│ <no details available> │

║ ║│

This seems to be a bug. Try this in the receiving (sink) job, under recv and then placeholder:

encryption: inherit

If this does not work, do the first replication with off (as you have been doing).
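The error text says the handling is "unspecified in receiver config", so the setting belongs in the sink job. As far as I know the key is nested as `recv` → `placeholder` → `encryption`. Here is a small sketch that prints such a sink job so the nesting is visible; the function name is made up, and the name, root_fs and listener values are whatever you pass in:

```shell
#!/bin/sh
# Hypothetical helper: print a sink job with the placeholder encryption
# policy set. Usage: sink_job_fragment NAME ROOT_FS LISTENER [POLICY]
# POLICY defaults to "inherit"; "off" is the fallback suggested above.
sink_job_fragment() {
    policy="${4:-inherit}"
    cat <<EOF
  - type: sink
    name: $1
    root_fs: "$2"
    recv:
      placeholder:
        encryption: $policy
    serve:
      type: local
      listener_name: $3
EOF
}
```

For the config posted above that would be `sink_job_fragment backup zroot/linux/tsaroo backuppool_sink`. After editing the real file, `zrepl configcheck` should tell you whether the daemon accepts it.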

After spending several hours releasing a LOT of zfs holds and destroying all the snapshots, I'm leaning towards trying sanoid. It looks simpler to configure and will probably be less error-prone (my LXD datasets have messed things up twice now with zrepl).

I think with any ZFS snapshot app you need to check your zfs holds and exclude datasets held by any other app (like LXD).
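Checking holds by hand gets tedious, so a loop helps. A minimal sketch, assuming you pass the dataset whose snapshots you want to inspect; the function name `list_holds` is just illustrative:

```shell
#!/bin/sh
# Hypothetical helper: print every hold on the snapshots below a dataset.
list_holds() {
    zfs list -H -t snapshot -o name -r "$1" | while IFS= read -r snap; do
        # -H: no header, tab-separated columns (snapshot, tag, timestamp)
        zfs holds -H "$snap"
    done
}
```

Each output line names the snapshot and the tag holding it; a hold can then be dropped with `zfs release TAG SNAPSHOT` once you are sure nothing needs it.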

Thanks so much, I've solved the first step. Now I would like to try to automate this.

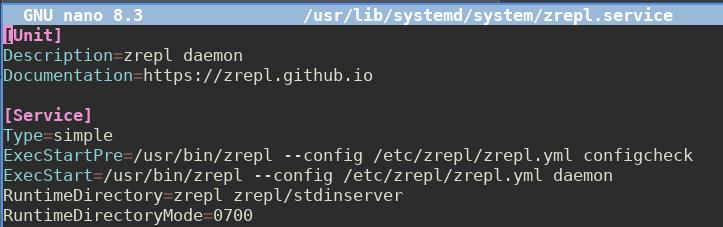

On Linux there is a zrepl systemd service that I enable. Maybe the FreeBSD maintainers added something similar? (Or just write a script that runs zrepl daemon.)

Yes, I'm using the systemd service on Linux and snapshots are automatic, but for send/recv I have to trigger the job manually from zrepl status.

And if possible I would also like to create a backup on another external drive, so that I have snapshots on my pool and two backups on two other disks.

Maybe I've solved the automation of send/recv.

I think the zrepl service runs whatever jobs are configured in /etc/zrepl/zrepl.yml (it does on my system including the file sync)
