Looking for thoughts on the best ZFS layout for a 45Drives XL60 to maximize usable storage space. It will be populated with 20+ TB spinning-rust drives and is one piece of a long-term file backup strategy for retention purposes. Once files are copied to it, the chance of them being accessed is very small, and they will never be modified.

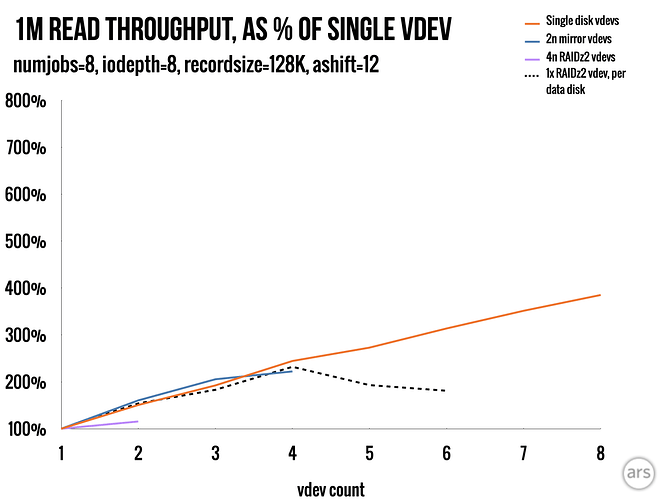

One suggestion is to just go super wide and set up four 15-drive RAID-Z3 vdevs in the pool with no spares, which should give the most usable capacity of the layouts being considered (48 data drives out of 60). Since super-wide vdevs are usually not recommended because of their long rebuild (resilver) times, should we go with six 10-wide RAID-Z3 vdevs instead? That drops usable capacity to 42 data drives, but should increase drive failure tolerance (18 parity drives instead of 12), decrease rebuild time, and increase performance. For this use case, though, the extra performance from more vdevs is not really needed.
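To put rough numbers on the trade-off, here is a minimal sketch that counts data and parity drives for the two RAID-Z3 layouts above. It assumes 20 TB drives (the low end of "20+ TB"), and `RaidzLayout` and `raw_data_tb` are hypothetical names for illustration. The figures are data-drive capacity only; real usable space will be lower once ZFS metadata, slop space, RAID-Z padding, and TB-vs-TiB differences are accounted for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaidzLayout:
    """A pool built from identical RAID-Z vdevs, with no hot spares."""
    vdevs: int
    width: int   # drives per vdev
    parity: int  # 3 for RAID-Z3

    @property
    def total_drives(self) -> int:
        return self.vdevs * self.width

    @property
    def parity_drives(self) -> int:
        return self.vdevs * self.parity

    def raw_data_tb(self, drive_tb: float) -> float:
        # Data-drive capacity only; ignores ZFS metadata, slop space,
        # RAID-Z padding, and the TB vs TiB difference.
        return self.vdevs * (self.width - self.parity) * drive_tb


DRIVE_TB = 20  # assumption: the post only says "20+ TB"

layouts = {
    "4 x 15-wide RAID-Z3": RaidzLayout(vdevs=4, width=15, parity=3),
    "6 x 10-wide RAID-Z3": RaidzLayout(vdevs=6, width=10, parity=3),
}

for name, layout in layouts.items():
    print(f"{name}: {layout.total_drives} drives, "
          f"{layout.parity_drives} parity, "
          f"~{layout.raw_data_tb(DRIVE_TB):.0f} TB before overhead")
```

Both layouts tolerate three failed drives per vdev. The difference is that the narrower layout has six vdevs instead of four, and each resilver only has to read from nine surviving drives rather than fourteen.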

I have no experience with dRAID yet, but would it be a better solution for a system with this many drives? How well is dRAID supported, and is it considered mature enough for production use? What dRAID layouts would be suggested for this use case?
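For comparing dRAID candidates against the RAID-Z3 options, a rough capacity estimate can be made from the vdev spec string (parity level, data drives per redundancy group, total children, distributed spares, as in `draid3:8d:60c:2s`). This is a minimal sketch under simplifying assumptions: `draid_usable_tb` is a hypothetical helper, the parser requires all four fields to be written out (OpenZFS itself lets some be omitted), and the estimate ignores dRAID's fixed stripe width. Because small blocks are padded out to a full stripe, the estimate is more realistic for large archive files than for many small ones.

```python
import re


def draid_usable_tb(spec: str, drive_tb: float) -> float:
    """Rough usable capacity of one dRAID vdev, e.g. "draid3:8d:60c:2s".

    Simplified parser: parity, data, children and spares must all be
    written out explicitly.
    """
    m = re.fullmatch(r"draid(\d):(\d+)d:(\d+)c:(\d+)s", spec)
    if m is None:
        raise ValueError(f"unsupported spec: {spec}")
    parity, data, children, spares = map(int, m.groups())
    # Sanity check: one redundancy group must fit in the non-spare drives.
    if data + parity > children - spares:
        raise ValueError("redundancy group does not fit in non-spare drives")
    # Distributed spare space is reserved; every redundancy group stores
    # `data` data sectors per `parity` parity sectors. Ignores padding of
    # small blocks to the fixed stripe width and other ZFS overhead.
    return (children - spares) * data / (data + parity) * drive_tb


# Example candidates for a 60-bay chassis with assumed 20 TB drives.
for spec in ("draid3:8d:60c:2s", "draid3:12d:60c:2s"):
    print(f"{spec}: ~{draid_usable_tb(spec, 20):.0f} TB before overhead")
```

The distributed spares cost some capacity up front, but they are what let a dRAID vdev rebuild onto spare space spread across every drive instead of a single replacement disk.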