Preface:

Please know that before creating this post I did my absolute best not to bother you fine folks with this request. I scoured the forums and tried some fixes, then tried all the fixes, and I am still at the same spot where I started.

It should also be noted that I BARELY know what I am doing in comparison to a lot of you, so take that for what it is.

Problem:

I have very slow download with perfect upload speeds on both the host and client machines.

The same problem persists when a NIC is passed through directly to a client machine, and it occurs on all VMs regardless of OS.

The host hardware was used previously with no issue.

Steps taken so far with no improvement:

Verified full GbE connection between switch and host

Changed NIC and cables

Reinstalled PVE

Directly passed a wireless Intel NIC to multiple VMs, same issue

Directly passed a Realtek wired NIC to multiple VMs, same issue

Changed MTU size (it's back to 1500 now)

Added pre-up ethtool -K enp39s0 rx off tx off to /etc/network/interfaces

Added processor.max_cstate=1 to the kernel command line in grub

The last three were buried in some forum posts so I tried them.

I also have pasted below some information that was requested in other posts

I know it’s going to be something with the host machine, I just don’t know what…

Any help would be greatly appreciated as I really did try to learn and solve this on my own, however I just don’t think I am quite there yet.

TIA

Host Machine

Ryzen 5 5600X

48 GB DDR4 RAM

MSI MPG X570 motherboard

PVE Version

root@proxmox:~# pveversion -v

proxmox-ve: 8.1.0 (running kernel: 6.5.13-5-pve)

pve-manager: 8.1.10 (running version: 8.1.10/4b06efb5db453f29)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.5.13-5-pve-signed: 6.5.13-5

proxmox-kernel-6.5: 6.5.13-5

proxmox-kernel-6.5.11-8-pve-signed: 6.5.11-8

ceph-fuse: 17.2.7-pve2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.3

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.5

libpve-cluster-perl: 8.0.5

libpve-common-perl: 8.1.1

libpve-guest-common-perl: 5.0.6

libpve-http-server-perl: 5.0.6

libpve-network-perl: 0.9.6

libpve-rs-perl: 0.8.8

libpve-storage-perl: 8.1.5

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.1.5-1

proxmox-backup-file-restore: 3.1.5-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.5

proxmox-widget-toolkit: 4.1.5

pve-cluster: 8.0.5

pve-container: 5.0.9

pve-docs: 8.1.5

pve-edk2-firmware: 4.2023.08-4

pve-firewall: 5.0.3

pve-firmware: 3.11-1

pve-ha-manager: 4.0.3

pve-i18n: 3.2.1

pve-qemu-kvm: 8.1.5-4

pve-xtermjs: 5.3.0-3

qemu-server: 8.1.1

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.3-pve

grub

#If you change this file, run 'update-grub' afterwards to update

#/boot/grub/grub.cfg.

#For full documentation of the options in this file, see:

#info -f grub -n 'Simple configuration'

GRUB_DEFAULT=0

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on processor.max_cstate=1"

GRUB_CMDLINE_LINUX=""

#If your computer has multiple operating systems installed, then you

#probably want to run os-prober. However, if your computer is a host

#for guest OSes installed via LVM or raw disk devices, running

#os-prober can cause damage to those guest OSes as it mounts

#filesystems to look for things.

#GRUB_DISABLE_OS_PROBER=false

#Uncomment to enable BadRAM filtering, modify to suit your needs

#This works with Linux (no patch required) and with any kernel that obtains

#the memory map information from GRUB (GNU Mach, kernel of FreeBSD …)

#GRUB_BADRAM="0x01234567,0xfefefefe,0x89abcdef,0xefefefef"

#Uncomment to disable graphical terminal

#GRUB_TERMINAL=console

#The resolution used on graphical terminal

#note that you can use only modes which your graphic card supports via VBE

#you can see them in real GRUB with the command `vbeinfo'

#GRUB_GFXMODE=640x480

#Uncomment if you don't want GRUB to pass "root=UUID=xxx" parameter to Linux

#GRUB_DISABLE_LINUX_UUID=true

#Uncomment to disable generation of recovery mode menu entries

#GRUB_DISABLE_RECOVERY="true"

#Uncomment to get a beep at grub start

#GRUB_INIT_TUNE="480 440 1"

ethtool

root@proxmox:~# ethtool enp39s0

Settings for enp39s0:

Supported ports: [ TP MII ]

Supported link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Supported pause frame use: Symmetric Receive-only

Supports auto-negotiation: Yes

Supported FEC modes: Not reported

Advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Advertised pause frame use: Symmetric Receive-only

Advertised auto-negotiation: Yes

Advertised FEC modes: Not reported

Link partner advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Link partner advertised pause frame use: No

Link partner advertised auto-negotiation: Yes

Link partner advertised FEC modes: Not reported

Speed: 1000Mb/s

Duplex: Full

Auto-negotiation: on

master-slave cfg: preferred slave

master-slave status: slave

Port: Twisted Pair

PHYAD: 0

Transceiver: external

MDI-X: Unknown

Supports Wake-on: pumbg

Wake-on: d

Link detected: yes

/etc/network/interfaces

#network interface settings; autogenerated

#Please do NOT modify this file directly, unless you know what

#you're doing.

#If you want to manage parts of the network configuration manually,

#please utilize the 'source' or 'source-directory' directives to do

#so.

#PVE will preserve these directives, but will NOT read its network

#configuration from sourced files, so do not attempt to move any of the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

iface enp39s0 inet manual

pre-up ethtool -K enp39s0 rx off tx off

iface wlp41s0 inet manual

iface enp41s0 inet manual

auto vmbr0

iface vmbr0 inet static

address 192.168.1.200/24

gateway 192.168.1.1

bridge-ports enp39s0

bridge-stp off

bridge-fd 0

source /etc/network/interfaces.d/

Iperf from physical machine on the network to host

accepted connection from 192.168.1.200, port 45062

[ 5] local 192.168.1.213 port 5201 connected to 192.168.1.200 port 45068

[ ID] Interval Transfer Bandwidth

[ 5] 0.00-1.00 sec 10.6 MBytes 89.2 Mbits/sec

[ 5] 1.00-2.00 sec 11.0 MBytes 92.3 Mbits/sec

[ 5] 2.00-3.00 sec 11.0 MBytes 92.3 Mbits/sec

[ 5] 3.00-4.00 sec 11.0 MBytes 92.4 Mbits/sec

[ 5] 4.00-5.00 sec 11.0 MBytes 92.2 Mbits/sec

[ 5] 5.00-6.00 sec 11.0 MBytes 92.4 Mbits/sec

[ 5] 6.00-7.00 sec 11.0 MBytes 92.2 Mbits/sec

[ 5] 7.00-8.00 sec 10.7 MBytes 89.8 Mbits/sec

[ 5] 8.00-9.00 sec 11.0 MBytes 92.4 Mbits/sec

[ 5] 9.00-10.00 sec 11.0 MBytes 92.5 Mbits/sec

[ 5] 10.00-10.05 sec 593 KBytes 92.7 Mbits/sec

[ ID] Interval Transfer Bandwidth

[ 5] 0.00-10.05 sec 0.00 Bytes 0.00 bits/sec sender

[ 5] 0.00-10.05 sec 110 MBytes 91.8 Mbits/sec receiver

Iperf from host to physical machine on the network

Connecting to host 192.168.1.213, port 5201

[ 5] local 192.168.1.200 port 45068 connected to 192.168.1.213 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 12.0 MBytes 101 Mbits/sec 0 220 KBytes

[ 5] 1.00-2.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 2.00-3.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 3.00-4.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 4.00-5.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 5.00-6.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 6.00-7.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 7.00-8.00 sec 10.6 MBytes 88.9 Mbits/sec 0 220 KBytes

[ 5] 8.00-9.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ 5] 9.00-10.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 111 MBytes 92.9 Mbits/sec 0 sender

[ 5] 0.00-10.00 sec 110 MBytes 92.3 Mbits/sec receiver
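To put the iperf numbers above next to the negotiated link speed, here is a minimal Python sketch. The sample lines are copied from the host-side listing, and the 1000 Mb/s figure is the "Speed" line from the ethtool output. The names `IPERF_LINES`, `LINK_SPEED_MBITS`, and `interval_rates` are made up for illustration, and the regular expression assumes the whitespace-separated layout shown in this post.

```python
import re

# Per-second interval lines copied from the host-side iperf listing above,
# plus the sender summary line (which the parser is expected to skip).
IPERF_LINES = """\
[ 5] 0.00-1.00 sec 12.0 MBytes 101 Mbits/sec 0 220 KBytes
[ 5] 1.00-2.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 2.00-3.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 3.00-4.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 4.00-5.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 5.00-6.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 6.00-7.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 7.00-8.00 sec 10.6 MBytes 88.9 Mbits/sec 0 220 KBytes
[ 5] 8.00-9.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 9.00-10.00 sec 11.0 MBytes 92.4 Mbits/sec 0 220 KBytes
[ 5] 0.00-10.00 sec 111 MBytes 92.9 Mbits/sec 0 sender
""".splitlines()

# Negotiated speed reported by ethtool for enp39s0 ("Speed: 1000Mb/s").
LINK_SPEED_MBITS = 1000.0

# Matches "] <start>-<end> sec <amount> <unit> <rate> Mbits/sec".
INTERVAL_RE = re.compile(
    r"\]\s+[\d.]+-[\d.]+\s+sec\s+[\d.]+\s+\w+\s+([\d.]+)\s+Mbits/sec"
)


def interval_rates(lines):
    """Return the per-interval bitrates in Mbit/s, skipping summary lines."""
    rates = []
    for line in lines:
        # Summary lines end in "sender" or "receiver"; they are totals, not intervals.
        if line.rstrip().endswith(("sender", "receiver")):
            continue
        match = INTERVAL_RE.search(line)
        if match:
            rates.append(float(match.group(1)))
    return rates


rates = interval_rates(IPERF_LINES)
avg = sum(rates) / len(rates)
print(f"{len(rates)} intervals, mean {avg:.1f} Mbit/s, "
      f"{avg / LINK_SPEED_MBITS:.1%} of the negotiated link speed")
```

The mean lands a little under 100 Mbit/s, far below the negotiated 1000 Mb/s. Throughput capped in this range is what a 100 Mb/s hop somewhere in the path typically looks like, but that is only a guess from the numbers and not something these listings prove.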

Speedtest on host

Retrieving speedtest.net configuration…

Testing from…

Retrieving speedtest.net server list…

Selecting best server based on ping…

Hosted…: 28.825 ms

Testing download speed…

Download: 14.91 Mbit/s

Testing upload speed…

Upload: 52.62 Mbit/s

Speedtest on guest

root@pihole:~# speedtest

Speedtest by Ookla

Idle Latency: 16.24 ms (jitter: 2.05ms, low: 13.47ms, high: 19.51ms)

Download: 18.35 Mbps (data used: 13.4 MB)

22.55 ms (jitter: 31.94ms, low: 5.67ms, high: 262.15ms)

Upload: 52.00 Mbps (data used: 26.8 MB)

16.16 ms (jitter: 11.94ms, low: 7.81ms, high: 172.51ms)

Packet Loss: 0.0%
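The two speedtest results can be compared with a few lines of Python. This is only a sketch: the figures are copied from the host and guest listings above, and the names `RESULTS` and `down_up_ratio` are made up for illustration.

```python
# Download/upload figures in Mbit/s, copied from the two speedtest listings above.
RESULTS = {
    "host": {"download": 14.91, "upload": 52.62},
    "guest (pihole)": {"download": 18.35, "upload": 52.00},
}


def down_up_ratio(result):
    """Return download speed as a fraction of upload speed."""
    return result["download"] / result["upload"]


for name, result in RESULTS.items():
    print(f"{name}: download is {down_up_ratio(result):.2f}x upload")
```

On both the host and the guest, download comes out at roughly a third of upload, which matches the slow-download, normal-upload pattern described under Problem.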

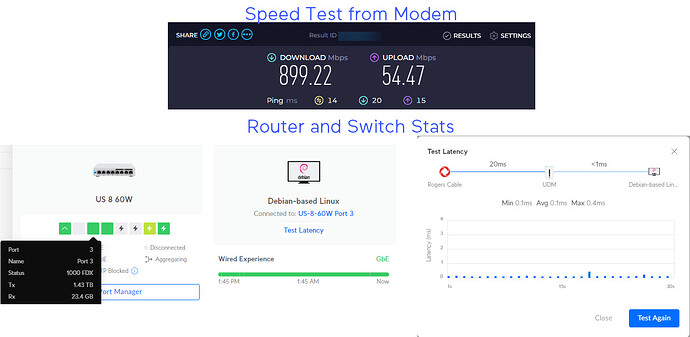

Speedtest from Router and Switch Stats