Has anybody used Immich with ZFS? I don't know whether ZFS is the problem, but I've been having issues since switching to it.

On my Debian 12 machine running YunoHost, I ran Immich in Docker for about two years. The photos were stored on an mdadm RAID10 array with an ext4 filesystem, and I never had problems.

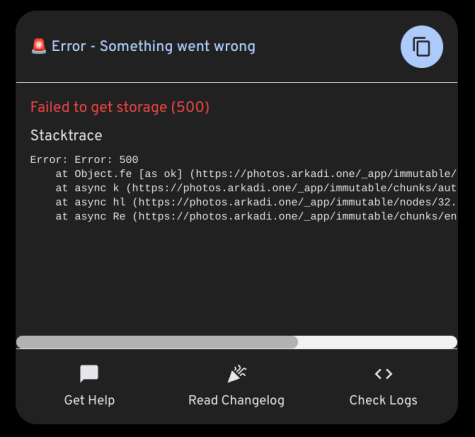

About a week ago I switched to ZFS, and since then Immich intermittently can't find the storage. If I poke the ZFS storage, it suddenly finds the files again.

- Immich couldn't find the thumbnails.

- I changed a Docker environment variable from `/mnt/hermes` to `/mnt/hermes/`, and it worked.

- About 7 days later, the same issue came back.

- I thought the problem was fixed, but it isn't.

So what could the problem be?

- Are the ZFS drives going to sleep?

- Do I need to adjust settings in Debian or Docker?

- Could this be an Immich problem?
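One way to narrow down the "can't find the storage" symptom is to check whether the dataset is actually mounted at the moment Immich fails. Below is a minimal sketch, assuming a Linux host (it reads `/proc/mounts`); the helper names `is_mounted` and `check_path` are hypothetical. On a ZFS system, `zfs get mounted hermes/immich-photos` or `findmnt /mnt/hermes/immich-photos` answer the same question directly.

```shell
#!/bin/sh
# Sketch with hypothetical helper names: report whether a path is currently
# a mount point by reading /proc/mounts. Linux-only.

is_mounted() {
    p=$1
    # Drop a single trailing slash so "/mnt/hermes/" matches "/mnt/hermes",
    # but leave the root path "/" unchanged.
    [ "$p" != "/" ] && p=${p%/}
    # Field 2 of /proc/mounts is the mount point. Spaces in paths are escaped
    # as \040 there, so this simple comparison assumes no spaces in the path.
    awk -v p="$p" '$2 == p { found = 1 } END { exit(found ? 0 : 1) }' /proc/mounts
}

check_path() {
    if is_mounted "$1"; then
        echo "$1: mounted"
    else
        echo "$1: NOT mounted"
    fi
}

# Example usage with the paths from this post:
#   check_path /mnt/hermes
#   check_path /mnt/hermes/immich-photos
```

If the dataset is not mounted, `/mnt/hermes/immich-photos` is just an ordinary (possibly empty) directory, and a Docker bind mount of it would still succeed, which would look like missing files from inside the container.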

Server details:

Four internal 3.5-inch drives (not in USB enclosures).

```
$ zpool status
  pool: hermes
 state: ONLINE
  scan: resilvered 692K in 00:00:01 with 0 errors on Sat Aug 17 22:00:15 2024
config:

        NAME                                   STATE     READ WRITE CKSUM
        hermes                                 ONLINE       0     0     0
          mirror-0                             ONLINE       0     0     0
            ata-TOSHIBA_HDWD130_82VGAX9AS      ONLINE       0     0     0
            ata-TOSHIBA_DT01ABA300V_80VZ0X5AS  ONLINE       0     0     0
          mirror-1                             ONLINE       0     0     0
            ata-TOSHIBA_DT01ABA300V_80VZ0VHAS  ONLINE       0     0     0
            ata-ST3000DM007-1WY10G_ZFN38ALM    ONLINE       0     0     0

errors: No known data errors
```

Docker is pointing to `/mnt/hermes/immich-photos`.

```
$ zfs list
NAME                  USED  AVAIL  REFER  MOUNTPOINT
hermes               1.62T  3.69T   128K  /mnt/hermes
hermes/immich-photos  349G  3.69T   349G  /mnt/hermes/immich-photos
```

I’d appreciate suggestions or wild speculation. Thank you!