Hey all, just a quick little blurb and sanity check. Am I on the right track here?

Block Cloning:

With OpenZFS 2.2/TrueNAS Cobia we now have a really cool new feature called block cloning. What is it? “Basically free” deduplication…but with an asterisk.

Nothing is free in life, and block cloning has some limitations on when it can work its magic.

PREFACE:

I have a fairly elaborate system for ingesting media files, with a “staging” folder that new media lands in as it is ingested. That staging folder actually lives in a separate dataset, which has been my design for years. The goal was to get more granular snapshot retention policies and better space efficiency by retaining the production files longer than the source/staging files.

I keep these original media files for a period of time, but I do not use them directly.

This media can come from:

- a camera

- a DVR

- old VHS tape captures or DVD rips

- AI-upscaled projects from Topaz

- Internet Archive torrents

I have scripts that copy them to another dataset and then re-encode them. The “copy script” uses Python’s `shutil.copy2(file_path, new_file_path)`.
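Whether a copy gets cloned at all depends on which system call performs it: on Linux, OpenZFS implements block cloning behind `copy_file_range()` (and the `FICLONE`/`FICLONERANGE` ioctls), while the mechanism `shutil.copy2()` uses internally varies between Python versions. A minimal sketch, assuming Python 3.8+ on Linux, of a copy helper that requests `copy_file_range()` explicitly and falls back to a plain userspace copy if the kernel refuses; the name `clone_copy` is hypothetical and not part of my actual scripts.

```python
import os
import shutil


def _try_copy_file_range(fsrc, fdst) -> bool:
    """Copy the whole file with os.copy_file_range(); return False on failure."""
    if not hasattr(os, "copy_file_range"):  # Linux-only API
        return False
    remaining = os.fstat(fsrc.fileno()).st_size
    try:
        while remaining > 0:
            # offset_src/offset_dst omitted: uses and advances both file offsets.
            copied = os.copy_file_range(fsrc.fileno(), fdst.fileno(), remaining)
            if copied == 0:  # kernel/filesystem made no progress
                return False
            remaining -= copied
    except OSError:
        return False
    return True


def clone_copy(src: str, dst: str) -> None:
    """Hypothetical shutil.copy2() replacement that prefers copy_file_range().

    On a filesystem that supports it (e.g. OpenZFS with block cloning
    enabled), the kernel may reference existing blocks instead of
    writing new ones. Metadata is copied afterwards, like copy2().
    """
    with open(src, "rb") as fsrc, open(dst, "wb") as fdst:
        if not _try_copy_file_range(fsrc, fdst):
            # Start over with an ordinary byte-for-byte copy.
            fsrc.seek(0)
            fdst.seek(0)
            fdst.truncate()
            shutil.copyfileobj(fsrc, fdst)
    shutil.copystat(src, dst)
```

Either way the resulting file is identical; only whether ZFS is *asked* to share blocks changes, and whether it actually does so is still up to the pool and dataset configuration.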

Basically, my use case is video production and Plex rolled into one.

QUESTION:

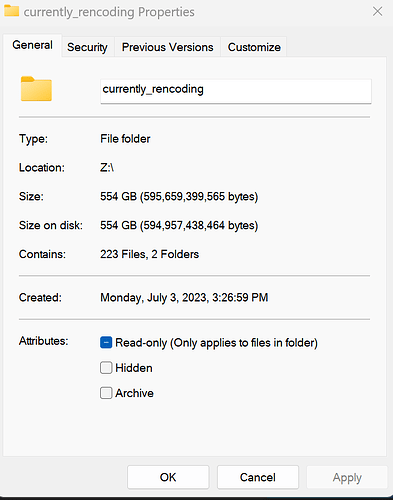

Once the copied files are converted, they are deleted. However, because there is some lag between copying and re-encoding, there is always some duplicate data stored. Currently my backlog is ~500 GiB.

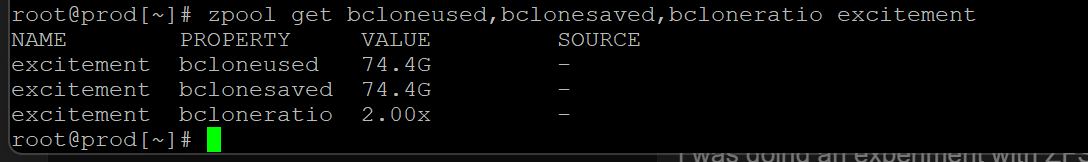

But unfortunately, I am not realizing all of these savings from block cloning.
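To compare the backlog against what the block reference table (BRT) actually reports, the pool properties `bcloneused` and `bclonesaved` (added alongside block cloning in OpenZFS 2.2) can be read with `zpool get -Hp`, which prints exact byte values, tab-separated, without a header. A minimal sketch, assuming the `zpool` CLI is on `PATH`; the pool name and backlog directory are hypothetical placeholders.

```python
import os
import subprocess

# BRT-related pool properties reported by OpenZFS 2.2+.
BRT_PROPS = ("bcloneused", "bclonesaved")


def parse_zpool_get(output: str) -> dict[str, int]:
    """Parse `zpool get -Hp -o property,value ...` output into byte counts."""
    stats = {}
    for line in output.splitlines():
        prop, _, value = line.partition("\t")
        value = value.strip()
        if prop and value.isdigit():  # skip "-" or other non-numeric values
            stats[prop] = int(value)
    return stats


def brt_stats(pool: str) -> dict[str, int]:
    """Query the BRT counters for `pool` (e.g. the hypothetical "tank")."""
    result = subprocess.run(
        ["zpool", "get", "-Hp", "-o", "property,value", ",".join(BRT_PROPS), pool],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_zpool_get(result.stdout)


def backlog_bytes(root: str) -> int:
    """Apparent size of all regular files under a (hypothetical) backlog dir."""
    total = 0
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path) and os.path.isfile(path):
                total += os.path.getsize(path)
    return total
```

If every pending copy were cloned, `brt_stats("tank")["bclonesaved"]` should roughly track `backlog_bytes("/mnt/tank/production/incoming")` (both hypothetical); dividing each by `2**30` gives GiB for a side-by-side comparison.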

My guess is that this is because the copy crosses a dataset boundary. I’m fairly confident that by moving my ingestion folder out of its current, separate dataset and into a plain subfolder of the production dataset, I will be able to leverage block cloning for this workflow. But I am looking for a sanity check: I don’t want to re-engineer things only to find out my understanding was wrong lol.
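Before and after restructuring, it is easy to confirm whether the source and destination of a copy actually sit in different datasets: each mounted ZFS dataset is its own filesystem, so it normally has its own `st_dev` and its own mount point. A minimal sketch using only the standard library; the function names and example paths are hypothetical.

```python
import os


def same_filesystem(path_a: str, path_b: str) -> bool:
    """True if both paths are on the same mounted filesystem (same st_dev).

    On ZFS, a different st_dev normally means a different dataset.
    """
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


def mount_root(path: str) -> str:
    """Walk up from `path` until reaching the mount point it lives under."""
    path = os.path.realpath(path)
    while not os.path.ismount(path):  # "/" is always a mount, so this ends
        path = os.path.dirname(path)
    return path
```

For example, `same_filesystem("/mnt/tank/staging", "/mnt/tank/production")` returning `False`, with two different `mount_root()` results, would confirm the copy currently crosses a dataset boundary.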


FWIW: I do understand that for this workflow the savings reported by the BRT (block reference table) would only be temporary, because the re-encoded output files are no longer copies of the originals. But the end result is typically a much smaller file than the source, and I do not keep the originals forever. That fact is factored into my overall storage growth plans.

It just sucks that I waste about half a TiB every month or so for no reason, which is why I would love to get block cloning to help me out here. Since the production files are written once and never change, I’m fairly confident I can come up with some pretty sane snapshot retention policies that will let me keep realizing most of the savings.