Hello. I am in the process of trying out sandod/syncoid after using zfs-autobackup for a while. Sanoid appears to be doing it’s job correctly but I’m having some teething issues with Syncoid.

I am replicating in a push fashion from a server running FreeBSD 14.2-RELEASE to a server running FreeBSD 13.2-RELEASE-p11. They both have the latest version of sanoid installed, so neither end should be missing any dependencies. Note that I have not yet configured sanoid to actually run on the destination yet, so it is not doing anything with snapshots yet.

If I delete the dataset on the destination and run syncoid on the source, the full replication completes successfully:

[root@max ~]# syncoid --sshport=223 zroot/usr/local/etc pricetx@walt.chrisprice.co:zstore/backups/max/usr/local/etc

INFO: Sending oldest full snapshot zroot/usr/local/etc@autosnap_2025-03-11_18:09:56_monthly (~ 1.2 MB) to new target filesystem:

1.37MiB 0:00:00 [11.2MiB/s] [==============================================================================================================================================================================================] 118%

INFO: Updating new target filesystem with incremental zroot/usr/local/etc@autosnap_2025-03-11_18:09:56_monthly ... syncoid_max.jonathanprice.org_2025-03-13:09:32:59-GMT00:00 (~ 814 KB):

847KiB 0:00:00 [1.74MiB/s] [==============================================================================================================================================================================================] 104%

However, if I then run the same command a second time, I would expect it to sync any changes, but instead it throws an error

[root@max ~]# syncoid --sshport=223 zroot/usr/local/etc pricetx@walt.chrisprice.co:zstore/backups/max/usr/local/etc

Sending incremental zroot/usr/local/etc@syncoid_max.jonathanprice.org_2025-03-13:09:32:59-GMT00:00 ... syncoid_max.jonathanprice.org_2025-03-13:09:34:16-GMT00:00 (~ 4 KB):

1.52KiB 0:00:00 [3.93KiB/s] [=======================================================================> ] 38%

CRITICAL ERROR: zfs send -I 'zroot/usr/local/etc'@'syncoid_max.jonathanprice.org_2025-03-13:09:32:59-GMT00:00' 'zroot/usr/local/etc'@'syncoid_max.jonathanprice.org_2025-03-13:09:34:16-GMT00:00' | pv -p -t -e -r -b -s 4096 | lzop | mbuffer -q -s 128k -m 16M | ssh -p 223 -S /tmp/syncoid-pricetx@walt.chrisprice.co-1741858455-1153 pricetx@walt.chrisprice.co ' mbuffer -q -s 128k -m 16M | lzop -dfc | sudo zfs receive -s -F '"'"'zstore/backups/max/usr/local/etc'"'"' 2>&1' failed: 512 at /usr/local/bin/syncoid line 889.

Confusingly, if I take the zfs command that gets printed in the syncoid error output and run it directly it seems to complete successfully:

[root@max ~]# zfs send -I 'zroot/usr/local/etc'@'syncoid_max.jonathanprice.org_2025-03-13:09:32:59-GMT00:00' 'zroot/usr/local/etc'@'syncoid_max.jonathanprice.org_2025-03-13:09:34:16-GMT00:00' | pv -p -t -e -r -b -s 4096 | lzop | mbuffer -q -s 128k -m 16M | ssh -p 223 -S /tmp/syncoid-pricetx@walt.chrisprice.co-1741858455-1153 pricetx@walt.chrisprice.co ' mbuffer -q -s 128k -m 16M | lzop -dfc | sudo zfs receive -s -F '"'"'zstore/backups/max/usr/local/etc'"'"''

1.52KiB 0:00:00 [2.12KiB/s] [=======================================================================>

The cycle then repeats. If I try running syncoid again it throws the same error (albeit with new snapshot names), and then if I take the printed zfs send/recv command and run it myself it completes successfully.

I’m unsure what error 512, or indeed which application is even raising it.

I tried with other datasets too just to make sure it wasn’t an issue with this particular dataset, but the results are identical:

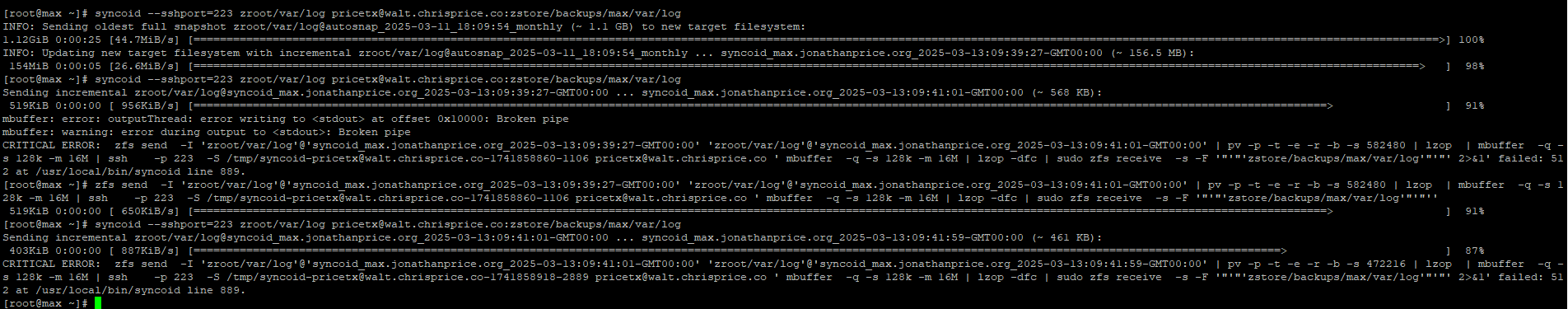

In-line copy of the above screenshot below:

[root@max ~]# syncoid --sshport=223 zroot/var/log pricetx@walt.chrisprice.co:zstore/backups/max/var/log

INFO: Sending oldest full snapshot zroot/var/log@autosnap_2025-03-11_18:09:54_monthly (~ 1.1 GB) to new target filesystem:

1.12GiB 0:00:25 [44.7MiB/s] [=============================================================================================================================================================================================>] 100%

INFO: Updating new target filesystem with incremental zroot/var/log@autosnap_2025-03-11_18:09:54_monthly ... syncoid_max.jonathanprice.org_2025-03-13:09:39:27-GMT00:00 (~ 156.5 MB):

154MiB 0:00:05 [26.6MiB/s] [==========================================================================================================================================================================================> ] 98%

[root@max ~]# syncoid --sshport=223 zroot/var/log pricetx@walt.chrisprice.co:zstore/backups/max/var/log

Sending incremental zroot/var/log@syncoid_max.jonathanprice.org_2025-03-13:09:39:27-GMT00:00 ... syncoid_max.jonathanprice.org_2025-03-13:09:41:01-GMT00:00 (~ 568 KB):

519KiB 0:00:00 [ 956KiB/s] [============================================================================================================================================================================> ] 91%

mbuffer: error: outputThread: error writing to <stdout> at offset 0x10000: Broken pipe

mbuffer: warning: error during output to <stdout>: Broken pipe

CRITICAL ERROR: zfs send -I 'zroot/var/log'@'syncoid_max.jonathanprice.org_2025-03-13:09:39:27-GMT00:00' 'zroot/var/log'@'syncoid_max.jonathanprice.org_2025-03-13:09:41:01-GMT00:00' | pv -p -t -e -r -b -s 582480 | lzop | mbuffer -q -s 128k -m 16M | ssh -p 223 -S /tmp/syncoid-pricetx@walt.chrisprice.co-1741858860-1106 pricetx@walt.chrisprice.co ' mbuffer -q -s 128k -m 16M | lzop -dfc | sudo zfs receive -s -F '"'"'zstore/backups/max/var/log'"'"' 2>&1' failed: 512 at /usr/local/bin/syncoid line 889.

[root@max ~]# zfs send -I 'zroot/var/log'@'syncoid_max.jonathanprice.org_2025-03-13:09:39:27-GMT00:00' 'zroot/var/log'@'syncoid_max.jonathanprice.org_2025-03-13:09:41:01-GMT00:00' | pv -p -t -e -r -b -s 582480 | lzop | mbuffer -q -s 128k -m 16M | ssh -p 223 -S /tmp/syncoid-pricetx@walt.chrisprice.co-1741858860-1106 pricetx@walt.chrisprice.co ' mbuffer -q -s 128k -m 16M | lzop -dfc | sudo zfs receive -s -F '"'"'zstore/backups/max/var/log'"'"''

519KiB 0:00:00 [ 650KiB/s] [============================================================================================================================================================================> ] 91%

[root@max ~]# syncoid --sshport=223 zroot/var/log pricetx@walt.chrisprice.co:zstore/backups/max/var/log Sending incremental zroot/var/log@syncoid_max.jonathanprice.org_2025-03-13:09:41:01-GMT00:00 ... syncoid_max.jonathanprice.org_2025-03-13:09:41:59-GMT00:00 (~ 461 KB):

403KiB 0:00:00 [ 887KiB/s] [=====================================================================================================================================================================> ] 87%

CRITICAL ERROR: zfs send -I 'zroot/var/log'@'syncoid_max.jonathanprice.org_2025-03-13:09:41:01-GMT00:00' 'zroot/var/log'@'syncoid_max.jonathanprice.org_2025-03-13:09:41:59-GMT00:00' | pv -p -t -e -r -b -s 472216 | lzop | mbuffer -q -s 128k -m 16M | ssh -p 223 -S /tmp/syncoid-pricetx@walt.chrisprice.co-1741858918-2889 pricetx@walt.chrisprice.co ' mbuffer -q -s 128k -m 16M | lzop -dfc | sudo zfs receive -s -F '"'"'zstore/backups/max/var/log'"'"' 2>&1' failed: 512 at /usr/local/bin/syncoid line 889.

[root@max ~]#

Does anybody have any suggestions on how I might troubleshoot this further?

Thanks,

Jonathan

EDIT: Apologies, new accounts can only post one image per post, but the last image shows everything mentioned in the previous images anyway.