Warning: first post here from a semi-newb (apologies in advance…)

I have a dataset that contains a raw VM image for a VM I use for gaming. My problem is that games are so yuuge these days: running syncoid -r to my backup server unintentionally just ate 150GB!

Currently I have sanoid's automatic snapshots disabled for this dataset. Instead, I manually snapshot a baseline configuration where all the game stores are installed and up to date but no games are installed. This way I can install a game, play it, then roll back when finished without bloating the local storage with game-install history. I do want these manually created snapshots to get backed up, but not the live ‘master’ revision, which likely holds an installed game.
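The baseline-and-rollback workflow above boils down to two ZFS commands. Here is a minimal sketch that builds them; the dataset name tank/vm/gaming and the snapshot label baseline are hypothetical placeholders, and the script only prints the commands unless dry_run is turned off.

```python
import subprocess

# Hypothetical names: substitute your own pool/dataset and snapshot label.
DATASET = "tank/vm/gaming"
BASELINE = "baseline"


def snapshot_cmd(dataset: str, label: str) -> list[str]:
    """Command that snapshots the clean, no-games baseline state."""
    return ["zfs", "snapshot", f"{dataset}@{label}"]


def rollback_cmd(dataset: str, label: str) -> list[str]:
    """Command that discards everything written since the snapshot.

    -r also destroys any snapshots newer than `label`; without it,
    zfs refuses to roll back past them.
    """
    return ["zfs", "rollback", "-r", f"{dataset}@{label}"]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Take the baseline once, with the VM shut down.
    run(snapshot_cmd(DATASET, BASELINE))
    # ... install a game, play it, shut the VM down again ...
    run(rollback_cmd(DATASET, BASELINE))
```

Only roll back while the VM is powered off, since the guest would otherwise keep writing to a disk image that just changed underneath it.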

Syncoid automatically creates ephemeral snapshots to send the master state, which is what’s wanted for the typical use-cases. The “exclude-snaps” command line argument could be used, but I think there may be a better way.

My idea: would it be cleaner to make sync-snap creation data-driven and per-dataset, like how syncoid:sync works? The syncoid:sync dataset property could be extended to check for a “no-sync-snap” value and, if it is set, skip the automatic ephemeral snapshot creation step.
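To make the proposal concrete, here is a rough sketch of a wrapper that approximates it today. syncoid already has a global --no-sync-snap option that sends only existing snapshots instead of creating a new one; the wrapper reads a per-dataset flag and adds that option dataset by dataset. The property name local:no-sync-snap, the "on" value, and the target names are all hypothetical. A separate user property is used rather than a new syncoid:sync value because syncoid already assigns its own meaning to syncoid:sync.

```python
import subprocess

# Hypothetical user property; neither ZFS nor syncoid defines it.
PROP = "local:no-sync-snap"


def read_prop(dataset: str) -> str:
    """Effective value of PROP on dataset; zfs prints "-" when it is unset."""
    result = subprocess.run(
        ["zfs", "get", "-H", "-o", "value", PROP, dataset],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def syncoid_cmd(dataset: str, target: str, prop_value: str) -> list[str]:
    """Per-dataset syncoid call; adds --no-sync-snap when the flag is "on"."""
    cmd = ["syncoid"]
    if prop_value == "on":
        # Send only the existing (manual) snapshots; do not snapshot the
        # live state first.
        cmd.append("--no-sync-snap")
    return cmd + [dataset, target]


def plan(values: dict[str, str], target_root: str) -> list[list[str]]:
    """One syncoid command per dataset, given {dataset: property value}."""
    return [syncoid_cmd(ds, f"{target_root}/{ds}", v) for ds, v in values.items()]
```

A real run would call read_prop() for each dataset and pass the results to plan(); keeping the decision in plan() makes it testable without a pool.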

This way, by setting this flag, my manually created snapshots would get backed up but not the game sitting in the master revision. I could see this being useful elsewhere too, wherever data-churn bloat needs to be avoided.

Or am I entirely missing something here?

Forgive me, I don’t fully understand how your VM is set up, but I’ll attempt to offer some ideas. I’m guessing that you have a disk image file (raw or qcow2) on the dataset?

It seems like the best option would be to have one dataset where the games are installed, another for your save files, and perhaps another that hosts your VM image. Then you could mount those datasets into the VM, either by attaching them as directories/disks in the VM configuration or by serving them over NFS/SMB (I’d recommend the former if you can get it to work).

One hiccup might occur if the save files and the game install data are colocated in the same directory. But this might be tenable if you use cloud saves or something.
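As a sketch of that layout, the commands below create one dataset per kind of data. The pool name tank and the dataset names are hypothetical; passing a size switches to a zvol instead of a normal filesystem dataset.

```python
import shlex
from typing import Optional

# Hypothetical pool and dataset names; adjust to your system.
LAYOUT = ["tank/vm", "tank/games", "tank/saves"]


def create_cmd(dataset: str, zvol_size: Optional[str] = None) -> list[str]:
    """zfs create for a filesystem dataset, or for a zvol when a size is given."""
    if zvol_size is not None:
        # A zvol is a block device; the guest has to put its own filesystem on it.
        return ["zfs", "create", "-p", "-V", zvol_size, dataset]
    # -p creates any missing parent datasets, much like mkdir -p.
    return ["zfs", "create", "-p", dataset]


for ds in LAYOUT:
    print(shlex.join(create_cmd(ds)))
```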

If you have your game installs in a separate dataset, then you can control if/when it will be backed up independent of the rest of your VM.
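One way to get that control, as far as I know, is the syncoid:sync property: recent syncoid releases skip datasets where it is set to false during a recursive (-r) run, and child datasets inherit the value. Check the README for your syncoid version before relying on this. The sketch only prints the commands; the source pool and the backup target are hypothetical placeholders.

```python
import shlex

# Hypothetical names: the source pool and backup target are placeholders.
SOURCE = "tank"
TARGET = "root@backupserver:backup/tank"


def exclude_cmd(dataset: str) -> list[str]:
    """Mark a dataset (and, via inheritance, its children) as not to be synced."""
    return ["zfs", "set", "syncoid:sync=false", dataset]


def recursive_sync_cmd(source: str, target: str) -> list[str]:
    """Replicate source and its child datasets to target."""
    return ["syncoid", "-r", source, target]


for cmd in (exclude_cmd("tank/games"), recursive_sync_cmd(SOURCE, TARGET)):
    print(shlex.join(cmd))
```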

If your whole VM is on a single dataset, there’s not much you can do at the ZFS level. You can control when snapshots and syncs are made, of course, and you could automate that with scripts if you wanted. But if you want ZFS to work for you here, the best approach is to figure out how to separate the bits you want to sync from the bits you don’t (or want to sync at a different cadence).
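If you do script it, "different cadence" can be as simple as a per-dataset interval. This is a hypothetical sketch: the dataset names and intervals are made up, tank/games is left out so it is never picked, and storing the last-sync timestamps is not shown. A cron job could call due() and then run syncoid for each dataset it returns.

```python
from datetime import datetime, timedelta

# Hypothetical cadences; tank/games is deliberately absent, so it is never due.
CADENCE: dict[str, timedelta] = {
    "tank/vm": timedelta(days=7),
    "tank/saves": timedelta(hours=1),
}


def due(cadence: dict[str, timedelta],
        last_sync: dict[str, datetime],
        now: datetime) -> list[str]:
    """Datasets whose interval has elapsed, or that have never been synced."""
    out = []
    for ds, interval in cadence.items():
        last = last_sync.get(ds)
        if last is None or now - last >= interval:
            out.append(ds)
    return out
```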

Example:

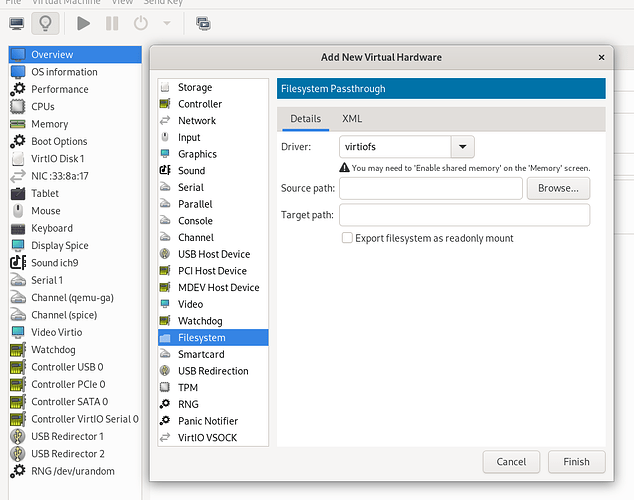

Create a dataset for your game installs. It can be either a normal filesystem dataset (probably preferred) or a zvol. Keep a separate dataset for your VM image. If your games are installed on Linux and you’re using Steam, you could copy the contents of your ~/.local/share/Steam dir to the game install dataset, then mount the game install dataset at ~/.local/share/Steam inside the VM.

If you’re using virt-manager, select ‘Add Hardware’ → ‘Filesystem’, set the source path to the mount point of the game install dataset on your host (say /tank/games), and set the target path to a mount tag such as steam. The target is a tag the guest uses to find the share rather than a path inside the guest, so you then mount that tag at ~/.local/share/Steam inside the VM (e.g. via /etc/fstab). If you use a zvol for your game install dataset instead, select ‘Add Hardware’ → ‘Storage’ to attach it to your VM as a disk, create a filesystem on it from inside the guest, and add an entry to your /etc/fstab to mount it at /home/YOURUSER/.local/share/Steam.
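For reference, the ‘Filesystem’ option corresponds to a libvirt filesystem element, and the guest side is an ordinary fstab entry. The sketch below generates both, assuming the virtiofs driver. The host path /tank/games, the tag steam, ext4, and the UUID placeholder for the zvol case are illustrative choices, not requirements. libvirt’s virtiofs setup also needs shared memory backing enabled for the guest, which is not shown here.

```python
import xml.etree.ElementTree as ET


def virtiofs_filesystem_xml(host_dir: str, tag: str) -> str:
    """libvirt <filesystem> element sharing host_dir into the guest as `tag`."""
    fs = ET.Element("filesystem", type="mount", accessmode="passthrough")
    ET.SubElement(fs, "driver", type="virtiofs")
    ET.SubElement(fs, "source", dir=host_dir)
    # For virtiofs, <target dir> is the mount tag, not a path in the guest.
    ET.SubElement(fs, "target", dir=tag)
    return ET.tostring(fs, encoding="unicode")


def fstab_line(spec: str, mountpoint: str, fstype: str,
               options: str = "defaults", dump: int = 0, passno: int = 0) -> str:
    """One /etc/fstab entry: device/tag, mount point, type, options, dump, pass."""
    return f"{spec}\t{mountpoint}\t{fstype}\t{options}\t{dump}\t{passno}"


print(virtiofs_filesystem_xml("/tank/games", "steam"))
# Guest side of the virtiofs share (fstab does not expand ~, so use the full path).
print(fstab_line("steam", "/home/YOURUSER/.local/share/Steam", "virtiofs"))
# zvol variant: the UUID is a placeholder for the filesystem created in the guest.
print(fstab_line("UUID=<filesystem-uuid>", "/home/YOURUSER/.local/share/Steam",
                 "ext4", passno=2))
```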

If your VM runs Windows and/or you’re using a different virtualization suite, the same thing should be possible, but I wouldn’t know how to do it.