I’m very new to ZFS, so bear with me as my wording may be incorrect…

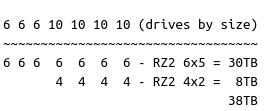

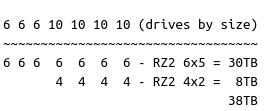

I have 3x 6TB drives and 4x 10TB drives that I would like to make full use of under ZFS. Is there any reason I couldn’t use a layout like the one pictured, where each 10TB drive is partitioned into a 6TB and a 4TB partition? Once partitioned, could I put all of the 6TB drives and 6TB partitions in one RAID-Z2 vdev, put the remaining 4TB partitions in another RAID-Z2 vdev, and then combine the two vdevs into a single zpool for a combined 38TB?

Is there a more sane way to utilize the full capacity of all seven drives?
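The 38TB figure can be checked with a rough calculation. This is a minimal sketch that assumes usable space is (members minus parity) times the smallest member, and it ignores RAID-Z padding, metadata, slop space and the TB vs TiB difference. The function name `raidz_usable` is just an illustrative helper, not a ZFS tool.

```python
# Rough nominal capacity of the proposed partitioned layout, in TB.
# Assumption: usable = (width - parity) * smallest member; ZFS overheads ignored.

def raidz_usable(member_sizes_tb: list[float], parity: int) -> float:
    """Approximate usable capacity of one RAID-Z vdev (hypothetical helper)."""
    if len(member_sizes_tb) <= parity:
        raise ValueError("a RAID-Z vdev needs more members than parity disks")
    return (len(member_sizes_tb) - parity) * min(member_sizes_tb)

# vdev A: the three whole 6TB drives plus the 6TB partition on each 10TB drive
vdev_a = [6.0] * 3 + [6.0] * 4
# vdev B: the leftover 4TB partition on each 10TB drive
vdev_b = [4.0] * 4

pool_tb = raidz_usable(vdev_a, parity=2) + raidz_usable(vdev_b, parity=2)
print(f"vdev A: {raidz_usable(vdev_a, 2):.0f} TB")
print(f"vdev B: {raidz_usable(vdev_b, 2):.0f} TB")
print(f"pool:   {pool_tb:.0f} TB")
```

The arithmetic does come out to 38TB, but every 10TB drive is a member of both vdevs, so a single failed 10TB drive degrades both vdevs at the same time.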

The performance will be terrible and it’ll be hard to manage over the long term.

There is no entirely clean way to make use of those drives, but I would probably do a single 3-wide raidz1 on the 6T drives and two 2-wide mirrors on the 4T drives, if I were determined to use them all as is.

With that said: have you got your backup sorted? You need to have backup.

Another option would be three 2-wide mirrors plus one spare. The third 6T drive is the spare.

That gives you 6T+4T+4T == 14T usable, vs the raidz1 plus mirrors from the last post giving you 12T+4T+4T == 20T usable.
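The two totals can be reproduced with the same rough rules, using the drive sizes exactly as this reply states them (6T and 4T). This is a sketch under the assumptions that a mirror yields its smallest member, a raidz vdev yields (members minus parity) times its smallest member, a hot spare adds no usable space, and ZFS overhead is ignored.

```python
# Compare "three mirrors + spare" with "raidz1 + two mirrors" (sizes in T, as quoted).

def mirror_usable(members):
    """A mirror vdev stores one copy's worth: its smallest member."""
    return min(members)

def raidz_usable(members, parity):
    """Approximate raidz usable space, ignoring padding and metadata."""
    return (len(members) - parity) * min(members)

three_mirrors_plus_spare = [
    mirror_usable([6, 6]),
    mirror_usable([4, 4]),
    mirror_usable([4, 4]),
    # the third 6T drive is the hot spare and contributes 0 usable T
]
raidz1_plus_mirrors = [
    raidz_usable([6, 6, 6], parity=1),
    mirror_usable([4, 4]),
    mirror_usable([4, 4]),
]

print(sum(three_mirrors_plus_spare), "T usable")  # 14
print(sum(raidz1_plus_mirrors), "T usable")       # 20
```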

So yes, this way is lower capacity, but it’s higher performance, FAR cleaner, and gives you the chance to grow into it with larger drive replacements in small batches as you go.

Can you buy an 8th drive? How write-intensive will this be?

I have 2 mirror vdevs (thus 4x drives); one of the vdevs has mismatched disk sizes and the vdevs themselves are different sizes. It’s sub-optimal but not the end of the world. ZFS keeps them busy enough and the combo outperforms the more OCD-compatible solution of separate 2-drive zpools. All of this would readily saturate a 1Gb or a 2.5Gb network connection.
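Whether a pool "saturates" a link is easier to judge with the link rate in MB/s. This minimal sketch converts nominal line rates using decimal units and 8 bits per byte; real SMB/NFS throughput will be somewhat lower because of protocol overhead.

```python
# Convert nominal Ethernet line rates (Gb/s) to MB/s for comparison with disk throughput.

def link_mb_per_s(gbit_per_s: float) -> float:
    """Gigabits per second -> megabytes per second (decimal units, no protocol overhead)."""
    return gbit_per_s * 1000 / 8

for gbit in (1, 2.5, 10):
    print(f"{gbit:>4} Gb/s ~ {link_mb_per_s(gbit):.1f} MB/s")
```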

If I had your combo I’d buy the last drive a companion and do four mirrors of 10+10, 10+10, 6+6, and 6+x. Put all four vdevs in a single zpool and get on with life. As drives fail you can worry about converging on some common size. Don’t let the perfect be the enemy of the good (or some such).
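As a rough check of the four-mirror suggestion: each 2-wide mirror contributes its smaller member. The size of the new companion drive `x` is not given, so this sketch assumes a hypothetical 6TB and ignores ZFS overhead.

```python
# Usable capacity of four 2-wide mirrors: 10+10, 10+10, 6+6, 6+x.
x_tb = 6  # hypothetical size of the newly bought companion drive

mirrors = [(10, 10), (10, 10), (6, 6), (6, x_tb)]
usable_tb = sum(min(pair) for pair in mirrors)  # each mirror yields its smaller member
print(f"{usable_tb} TB usable across {len(mirrors)} mirror vdevs")
```

Because a mirror is limited by its smaller member, buying an `x` larger than 6TB adds nothing until the other 6TB drive in that mirror is replaced as well.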

Honestly, I have two weeks left on a trial of UNRAID, but I’m looking very hard at TrueNAS. As for backups, I plan to back up to an old 6-bay QNAP NAS, but nothing is in place yet.

My needs are pretty simple… Home use for storing a bunch of pics and videos, some software, a bunch of containers running home automation, DNS, DHCP, a local wiki, etc., and simple file sharing for two PCs. I’m more concerned about getting as much storage space as possible out of the hardware I have, with the peace of mind ZFS offers. Performance isn’t really a concern, but at the same time I don’t want the pool to be unusable because of poor performance.

I realize my proposed scenario isn’t ideal, but for my needs, is it a workable solution? Would it bring ZFS to its knees at some point with two zpools on 4 of the disks? I’m all for “just trying it”, but I don’t know what I don’t know yet.

“Don’t let the perfect be the enemy of the good (or some such).”

is kind of where I’m at. I could just do away with the 6TB drives, but hey, “Use 'em if you got 'em” or something like that ;).

I only have a 1Gbps NIC at the moment on the box in question, which is good enough for now. Somewhere down the road I may add a 10Gbps NIC between it and my desktop to transfer edited videos, but that’s just a pipe dream ATM.

Again, I really don’t recommend your original plan of action with multiple pools on the same drives partitioned away. Yes it will “work,” no it is not the best way to make use of those drives. Or even the middling way to use them. It’s a terrible way to use them.

You can buy another drive and do all mirror vdevs as adaptive_chance suggested, or you can do one RAIDz1 on three of them and two mirrors as I suggested, or you can do three mirrors plus a hot spare as I suggested.

Or you can partition the drives and do two weird pools. Nobody will stop you. It will work. But it’s a very bad idea and I do not recommend doing it.

Quoting @adaptive_chance:

one of the vdevs has mismatched disk sizes

I plan to do this as a design choice: when planning the pool’s upgrade path, I would replace one drive every x months, getting a slightly larger drive each time. That way the pool can autoexpand with every upgrade, even with wider vdevs, e.g. 6- or 8-drive RAIDZ2s.
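Why staggered sizes help: a RAID-Z vdev is sized by its smallest member, so replacing the smallest drive only grows the vdev if the next-smallest drive is already larger. This sketch compares a staggered 6-wide RAID-Z2 with a matched one; all drive sizes are hypothetical, it assumes each replacement swaps out the current smallest drive, and it ignores ZFS overhead.

```python
# Usable capacity of a RAID-Z2 vdev after each single-drive replacement (sizes in TB).

def raidz_usable(members, parity=2):
    """Approximate usable space: (width - parity) * smallest member."""
    return (len(members) - parity) * min(members)

def staggered_upgrades(members, new_sizes, parity=2):
    """Return usable capacity at the start and after each replacement (hypothetical helper)."""
    members = sorted(members)
    history = [raidz_usable(members, parity)]
    for new in new_sizes:
        members.pop(0)          # retire the current smallest drive
        members.append(new)     # resilver onto the larger replacement
        members.sort()
        history.append(raidz_usable(members, parity))
    return history

staggered = staggered_upgrades([4, 5, 6, 7, 8, 9], [10, 11, 12])
matched = staggered_upgrades([6] * 6, [8] * 6)
print("staggered:", staggered)
print("matched:  ", matched)
```

With matched drives, capacity stays flat until the last drive is swapped; with staggered sizes, each swap raises the ceiling. In ZFS this growth also assumes the `autoexpand` pool property is enabled.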

I don’t really have a plan for mine. It started as a 4+4 and a 3+4 mirror, all in one pool. Not so bad…

Then the 4TB in the 3+4 mirror failed. I go drive shopping. I’m a guy who will give up 5xx0rpm when they pry it from my cold, dead fingers. The pickings are slim, but I landed on a WDC Blue: CMR, 8TB, 5640rpm, and there’s even a newer variant that runs cooler and faster. Likely reduced platter count. Boom, ordered.

I wanted to block off space to make future replacement easier (in case the hypothetical future replacement drive is a bit smaller), but this WDC Blue supports neither DCO nor HPA. So I made a 5TB ZFS partition and consumed the rest with an unformatted Linux partition. Added said ZFS partition to the zpool.

So the end result is a 4+4 vdev driven to 100% active on a regular basis while the 3+8 vdev has a somewhat less busy 3TB plus a short-stroked 8TB that’s loafing along at mostly <50% active. The obvious next step is replace the 3TB with a matching 8TB WDC Blue which would yield a nice bump in both space and overall zpool performance.
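The capacity side of this setup follows from two rules: a mirror is limited by its smallest member, and a partitioned disk only contributes its partition. This sketch uses the sizes from the post; the "after" case is hypothetical and assumes the existing member is still the 5TB partition (not yet enlarged) when the 3TB drive is replaced by a whole 8TB drive. ZFS overhead is ignored.

```python
# Pool capacity (TB) for a 4+4 mirror plus a mirror of a 3TB drive and a 5TB partition.

def mirror_usable(members_tb):
    """A mirror vdev is limited by its smallest member (disk or partition)."""
    return min(members_tb)

before = [mirror_usable([4, 4]), mirror_usable([3, 5])]  # 3TB disk + 5TB partition
# Hypothetical: replace the 3TB with a whole 8TB drive; the 5TB partition now sets the limit.
after = [mirror_usable([4, 4]), mirror_usable([8, 5])]

print("now:", sum(before), "TB")
print("after replacing the 3TB:", sum(after), "TB")
```

Until the 5TB partition itself is grown, that partition, not the 8TB disk it lives on, caps the second vdev.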

For now I don’t need the space. Besides, who can predict the HDD landscape in 6-24 months? There could be a 10, 12 or 14TB on the market cheap enough to forget about the 8TB. Or one of my 4TB units in the other vdev could fail in the interim.

Anyway, my point in this rambling is that I don’t worry too much about max performance and max space. I’m more interested in tuning ARC/L2ARC knobs to keep the IOPS away from the spinners. I could get matchy-matchy OCD with my drives and ensure every TB is utilized, but wind up overpaying for drives on a per-TB basis. Or I could retire odd-sized drives early and pay for replacements that I don’t really need. Each of these three approaches incurs some kind of cost…
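One way to see whether ARC/L2ARC tuning is keeping reads off the spinners is to watch the hit ratio. This is a minimal sketch assuming OpenZFS on Linux, which exposes counters in `/proc/spl/kstat/zfs/arcstats` as `name type data` rows after two header lines; other platforms expose these stats differently, and the helper names here are illustrative.

```python
# Compute ARC (and, if present, L2ARC) hit ratios from Linux OpenZFS kstats.
from pathlib import Path

ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")  # assumed Linux OpenZFS location

def parse_arcstats(text: str) -> dict[str, int]:
    """Parse 'name type data' rows into {name: value}, skipping header lines."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 3 and fields[2].isdigit():
            stats[fields[0]] = int(fields[2])
    return stats

def hit_ratio(stats: dict[str, int]) -> float:
    """hits / (hits + misses), or 0.0 if there has been no traffic yet."""
    hits, misses = stats.get("hits", 0), stats.get("misses", 0)
    total = hits + misses
    return hits / total if total else 0.0

if __name__ == "__main__":
    if ARCSTATS.exists():
        s = parse_arcstats(ARCSTATS.read_text())
        print(f"ARC hit ratio: {hit_ratio(s):.1%}")
        if "l2_hits" in s:
            l2 = {"hits": s["l2_hits"], "misses": s.get("l2_misses", 0)}
            print(f"L2ARC hit ratio: {hit_ratio(l2):.1%}")
    else:
        print("arcstats not found (not Linux OpenZFS?)")
```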