So I wanted to move my home dataset to my new pool.

I took a snapshot and replicated that dataset to the new pool.

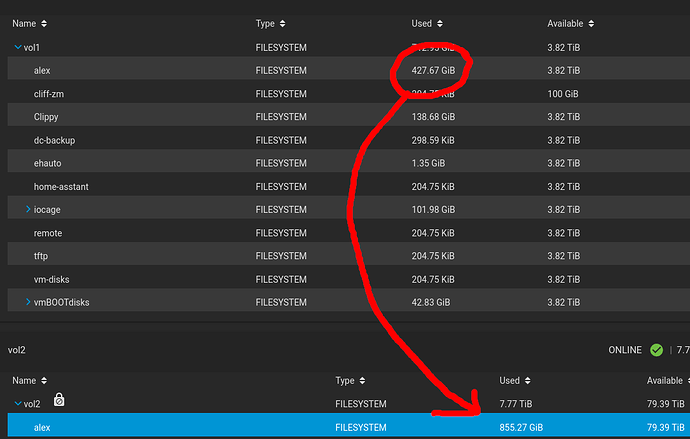

The replication task reported complete once the two datasets were the same size, but the disk LEDs kept blinking… “huh?” So I checked and, yep, reporting showed loads of disk activity. The activity didn’t stop until the new dataset was twice the size of the old one.

Please help me understand what's going on.

If compression is enabled on the source but not on the destination, this is easily explained. What are the values of compression and compressratio on each dataset?
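One way to answer that question is to read both properties with `zfs get`. The following is a minimal Python sketch, assuming the `zfs` CLI is on the PATH and the script runs with enough privileges to query the pool. The helper names `parse_zfs_get` and `get_compression` are made up for illustration, and any source dataset name you pass in is yours to fill in since the thread does not give it.

```python
import subprocess

def parse_zfs_get(output):
    """Parse tab-separated `zfs get -H -o name,property,value` output."""
    props = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, prop, value = line.split("\t")
        props.setdefault(name, {})[prop] = value
    return props

def get_compression(*datasets):
    """Query compression and compressratio (needs the zfs CLI installed)."""
    cmd = ["zfs", "get", "-H", "-o", "name,property,value",
           "compression,compressratio", *datasets]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_zfs_get(result.stdout)
```

Calling `get_compression("vol2/alex")` alongside the source dataset would put the two settings side by side; a `compressratio` well above 1.00x on the source only would point to compression as the cause.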

If the source uses 512-byte sectors and the destination uses 4 KiB sectors (ashift 9 vs 12), then small files, the tails of lots of files, compressed blocks, etc. can take up to 8x more space.
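The "up to 8x" figure follows from rounding every allocation up to a whole sector of 2^ashift bytes. A rough sketch of that arithmetic is below; it deliberately ignores parity, metadata, and everything else ZFS allocates, and the block sizes in the loop are arbitrary examples rather than measurements from this pool.

```python
def allocated_size(logical_bytes, ashift):
    """Round a block up to a whole number of 2**ashift-byte sectors."""
    sector = 1 << ashift
    return -(-logical_bytes // sector) * sector  # ceiling division

# Arbitrary example sizes: a tiny file, a small compressed block,
# an exactly aligned block, and a block just over one 4 KiB sector.
for size in (512, 1536, 4096, 5000):
    a9 = allocated_size(size, 9)
    a12 = allocated_size(size, 12)
    print(f"{size:>5} B: ashift 9 -> {a9} B, ashift 12 -> {a12} B "
          f"({a12 / a9:.2f}x)")
```

The worst case is a block of 512 bytes or less, which occupies one 512-byte sector at ashift 9 but a full 4096-byte sector at ashift 12. Blocks that are already multiples of 4 KiB see no inflation at all.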

If the vdev layout is different (raidz vs mirror, etc) you could see inflation due to skip blocks.
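To make the padding effect concrete, here is a small sketch modelled on the allocation-size arithmetic OpenZFS uses for RAIDZ, as far as I understand it: data sectors, plus parity sectors per stripe row, rounded up to a multiple of (parity + 1) sectors. The function name `raidz_asize` and the vdev widths in the examples are assumptions for illustration; the thread does not say what either pool's layout is.

```python
def raidz_asize(psize, ashift, cols, nparity):
    """Approximate raw bytes allocated for one block on a RAIDZ vdev.

    psize: physical (post-compression) block size in bytes
    cols: number of disks in the vdev, nparity: 1, 2 or 3
    """
    sectors = ((psize - 1) >> ashift) + 1              # data sectors
    rows = (sectors + cols - nparity - 1) // (cols - nparity)
    sectors += nparity * rows                          # parity sectors
    unit = nparity + 1
    sectors = -(-sectors // unit) * unit               # pad to multiple of unit
    return sectors << ashift

# Hypothetical layouts: 3-disk raidz1 and 6-disk raidz2, both ashift 12.
print(raidz_asize(8192, 12, cols=3, nparity=1))
print(raidz_asize(131072, 12, cols=6, nparity=2))
```

In the first example an 8 KiB block needs two data sectors and one parity sector, and the result is padded to four sectors, so one sector is pure padding. Large, well-aligned blocks such as the 128 KiB case waste far less proportionally.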

This smells like a combination of different ashift and different topology, from here.

Someone on Reddit was able to help. It turns out the replication task had made a rogue snapshot of the dataset, and that snapshot was taking up its own space:

| NAME | AVAIL | USED | USEDSNAP | USEDDS | USEDREFRESERV | USEDCHILD |
| --- | --- | --- | --- | --- | --- | --- |
| vol2/alex | 79.4T | 855G | 428G | 428G | 0B | 0B |
| vol2/alex@auto-2024-01-31_18-45 | - | 428G | - | - | - | - |

After deleting that snapshot, all was fine.
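The numbers in the listing above add up the way ZFS space accounting says they should: USED is the sum of USEDSNAP, USEDDS, USEDREFRESERV and USEDCHILD. A quick Python check using the values from the listing (the `parse_size` helper is just an illustration, and it assumes the suffixes are binary units as `zfs list` prints them):

```python
UNITS = {"B": 1, "K": 1024, "M": 1024**2, "G": 1024**3,
         "T": 1024**4, "P": 1024**5}

def parse_size(text):
    """Convert a zfs-style size such as '428G' or '0B' to bytes."""
    text = text.strip()
    return float(text[:-1]) * UNITS[text[-1].upper()]

# Values copied from the vol2/alex row in the listing.
row = {"USED": "855G", "USEDSNAP": "428G", "USEDDS": "428G",
       "USEDREFRESERV": "0B", "USEDCHILD": "0B"}

components = ("USEDSNAP", "USEDDS", "USEDREFRESERV", "USEDCHILD")
total = sum(parse_size(row[key]) for key in components)

gib = 1024**3
print(f"sum of components: {total / gib:.0f} GiB")
print(f"reported USED:     {parse_size(row['USED']) / gib:.0f} GiB")
```

The two figures differ by about 1 GiB, which is consistent with the rounding in the human-readable output. Half of the dataset's USED is the snapshot, which is why destroying it brought the size back in line with the source.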

ahhhhh, bitten by the zvol refreservation issue.

This is one of many reasons I really dislike zvols. By default, a zvol has its refreservation set to 100% of its size, which means that any snapshot of a zvol requires the entire space of the zvol to be reserved. (This is to keep from hitting ENOSPC due to running out of underlying storage for the zvol, as it diverges from its snapshots and they diverge from one another.)

You can set refreservation to a smaller value, in order to be able to realistically keep snapshots of your zvols. Or you can use a different backing topology, e.g. a sparse file on a dataset instead of a zvol. I’d strongly recommend doing one of the above, at least, because if you aren’t taking regular snapshots, you’re missing out on (several) AMAZING features of ZFS.
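Lowering the reservation is a single `zfs set` call. Below is a minimal sketch that only builds the command, so it can be reviewed before anything is changed. The zvol name `tank/vm-disk` and the 50G value are hypothetical, and the helper name `refreservation_cmd` is made up for this example. Keep in mind the trade-off described above: with a reduced reservation, writes to the zvol can fail if the pool runs out of space.

```python
import subprocess

def refreservation_cmd(zvol, value):
    """Build the argv for `zfs set refreservation=<value> <zvol>`.

    value is a size such as '50G', or 'none' to drop the reservation.
    """
    return ["zfs", "set", f"refreservation={value}", zvol]

def apply(cmd):
    """Run a prepared command (needs the zfs CLI and suitable privileges)."""
    subprocess.run(cmd, check=True)

# Hypothetical zvol name and size, shown without executing anything.
print(" ".join(refreservation_cmd("tank/vm-disk", "50G")))
print(" ".join(refreservation_cmd("tank/vm-disk", "none")))
```

Passing the result to `apply` would make the change; setting the value to `none` makes the zvol behave like a sparse (thin-provisioned) volume.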
