EDIT: The data is back after a long scrub and a reboot (see my last reply). I have no idea what happened. Any ideas? How can I prevent this?

Hi all,

This morning I found my server unresponsive (a NixOS install with Gnome, because I sometimes use it as a desktop). It’s likely that leaving Firefox with many tabs open somehow filled the memory and it ground to a halt.

I had to hard-reset the server. When it came back online I found my zpool mirror empty, whereas before it contained about 800 GB of data.

It does say that it is scrubbing:

    [freek@trantor:/zpool0/data1]$ df -h .
    Filesystem      Size  Used Avail Use% Mounted on
    zpool0/data1    2,8T  128K  2,8T   1% /zpool0/data1

    [freek@trantor:/zpool0/data1]$ zpool status -v
      pool: zpool0
     state: ONLINE
      scan: scrub in progress since Tue Oct 1 01:09:34 2024
            771G / 771G scanned, 207G / 771G issued at 137M/s
            0B repaired, 26.79% done, 01:10:18 to go
    config:

            NAME                        STATE     READ WRITE CKSUM
            zpool0                      ONLINE       0     0     0
              mirror-0                  ONLINE       0     0     0
                wwn-0x50014ee26604ad95  ONLINE       0     0     0
                wwn-0x50014ee2605e147a  ONLINE       0     0     0

    errors: No known data errors

The whole process is moving much slower than any previous scrubs I did manually (I did set it to weekly scrubs in my Nix configuration file).
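As a rough sanity check on the "to go" estimate, the arithmetic can be redone from the numbers in the status output. This is only a sketch: it assumes that `G` and `M` in the `zpool status` output mean GiB and MiB, and the variable names are my own.

```shell
# Values copied from the `zpool status -v` output above.
total_gib=771      # total amount the scrub has to issue
issued_gib=207     # amount issued so far
rate_mib_s=137     # reported issue rate (assumed to be MiB/s)

# Remaining data in MiB divided by the rate gives the seconds left
# (integer division, so the result is rounded down).
eta_seconds=$(( (total_gib - issued_gib) * 1024 / rate_mib_s ))

# Format as HH:MM:SS, the same layout zpool uses.
eta=$(printf '%02d:%02d:%02d' \
    $((eta_seconds / 3600)) $((eta_seconds % 3600 / 60)) $((eta_seconds % 60)))
echo "estimated time left: $eta"
```

This gives about 1 hour 10 minutes, which is in line with the `01:10:18 to go` that zpool reports, so the estimate at least agrees with the reported rate. The small difference is expected because the figures in the status output are rounded.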

I haven’t made any snapshots yet. I was going to try that next (this data was just for testing ZFS out, so nothing has really been lost, btw).

Should I just wait for the scrub to finish? Is data normally unavailable during scrubbing? I searched for this but didn’t find anything. But I’m a total noob, it’s my first attempt at using ZFS.

Edit: Maybe I deleted the data myself while my screen was off (highly unlikely)? Or maybe my disks were renamed (since I used sda/sdb as names)? Is there some kind of log I can check to see the last actions on the disk?
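On the log question, this is a sketch of what I think could be checked, not something I have verified as the right diagnosis. It assumes the pool name `zpool0` from the output above and enough privileges to run the ZFS tools. The function name `show_pool_activity` is made up for this post.

```shell
# Sketch only: pool name taken from the status output above.
pool=zpool0

show_pool_activity() {
    # Bail out early on machines without the ZFS tools installed.
    if ! command -v zpool >/dev/null 2>&1; then
        echo "zpool not found" >&2
        return 1
    fi
    # Command log for the pool: -i adds internally logged events,
    # -l adds the user and host that issued each command.
    zpool history -il "$1"
    # Per-dataset usage and mount state, to see where the space is used
    # and whether every dataset is actually mounted.
    zfs list -r -o name,used,mounted,mountpoint "$1"
}
```

Running `show_pool_activity "$pool"` should show whether anything was destroyed or deleted through ZFS commands, and whether the dataset that holds the data is simply not mounted.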

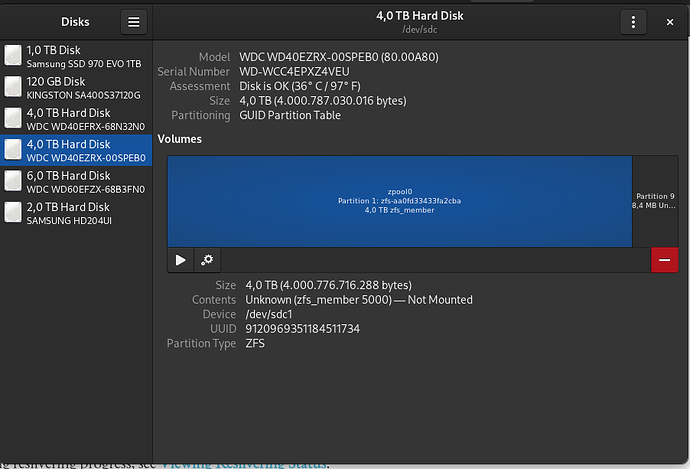

Gnome disks reports both disks as unmounted btw, is that normal?

Sorry, new users can only add 1 image.